This will be an occasional record of my work with IG: UPX and of the technologies and insights I pick up as I learn them. I’m not treating this as a formal journal; as I’ve said elsewhere, I’m not going to be journalling any of my personal life on this site, for several reasons.

However, the speed at which I’ve been learning as I begin my business journey has been unexpectedly high, and I felt it would be useful to document the journey without disclosing any intellectual property or personal information.

The basics: I’m using IG: UPX, but it is not my sole program. I run multiple ZP and non-ZP programs alongside one another, often at many times the recommended number of loops per week. Recon has been minimal, although it is sometimes difficult to separate recon from everyday tiredness, ordinary bodily inflammation and muscular tension, and the ordinary self-doubt that comes up from time to time.

The actions I’m taking at the moment are preparatory work towards the first program I’ll be working on for sale on a VR app store or two, which I’m code-naming Alexandria. I will not be discussing the purpose of this app or its USPs here; I don’t need any unwanted competition in the market.

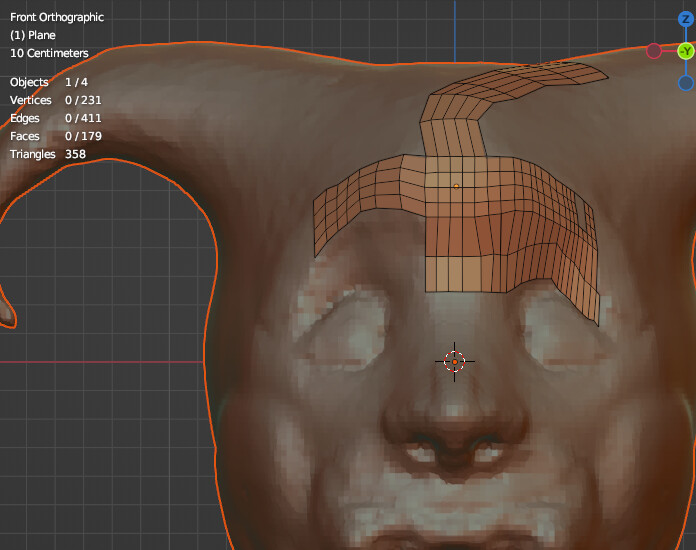

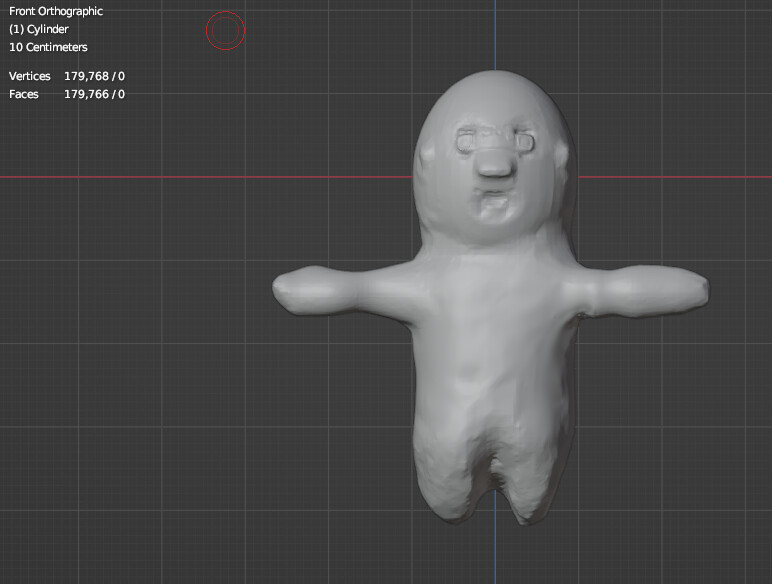

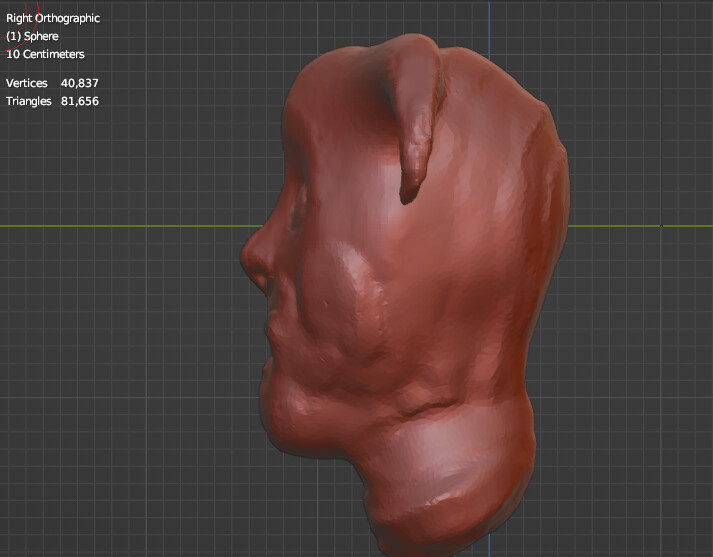

Building a VR app, game or non-game, is a pretty involved process. You need VR assets with a poly count appropriate for the platforms you are developing for; you need to understand the limitations of the platform your app will run on; you need to understand how to cross-compile the app on your development machine and deploy it remotely to your headset for testing; and you need to know which platform-specific (e.g. Android) or VR features it’s going to rely on, like head or hand tracking. You need to consider audio cues; you need to consider the engine you’ll be using to interact with the Vulkan or OpenXR API; and so on.
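As a rough illustration of the poly-count point, here is a minimal Python sketch that adds up the triangles in a scene and compares the total against a per-platform budget. Everything in it is an assumption made for the example: the platform names, the `Asset` class, the `over_budget` helper and especially the budget numbers are placeholders, not real limits for any headset or store.

```python
from dataclasses import dataclass

# Hypothetical whole-scene triangle budgets per target platform.
# Placeholder numbers for illustration only, not real platform limits.
TRIANGLE_BUDGETS = {
    "standalone_headset": 100_000,
    "pc_vr": 1_000_000,
}


@dataclass
class Asset:
    name: str
    triangles: int


def scene_triangles(assets):
    """Total triangle count across all assets in the scene."""
    return sum(asset.triangles for asset in assets)


def over_budget(assets, platform):
    """Return how many triangles the scene exceeds the budget by (0 if within it)."""
    budget = TRIANGLE_BUDGETS[platform]
    return max(0, scene_triangles(assets) - budget)


if __name__ == "__main__":
    # Made-up assets with made-up triangle counts.
    scene = [
        Asset("room", 40_000),
        Asset("bookshelf", 25_000),
        Asset("avatar", 50_000),
    ]
    for platform in TRIANGLE_BUDGETS:
        excess = over_budget(scene, platform)
        status = "within budget" if excess == 0 else f"over by {excess} triangles"
        print(f"{platform}: {status}")
```

In practice the real budget depends on the headset, the engine and what else the scene is doing, so the numbers would need to come from profiling on the actual device rather than from a table like this.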

So far my conclusion is that game development and ordinary app development in VR are quite similar workflows, except that with most apps you won’t have to be quite so concerned about how performant your scene is, since you won’t have potentially hundreds of NPCs to render at once. But in both cases you need a game engine to generate the virtual world and to interact with it in a realistic, physics-driven way.

I’ll update this periodically with details of the technologies I’m learning about, my thoughts on what I’m learning and, more specifically, the speed at which I’m acquiring new knowledge.

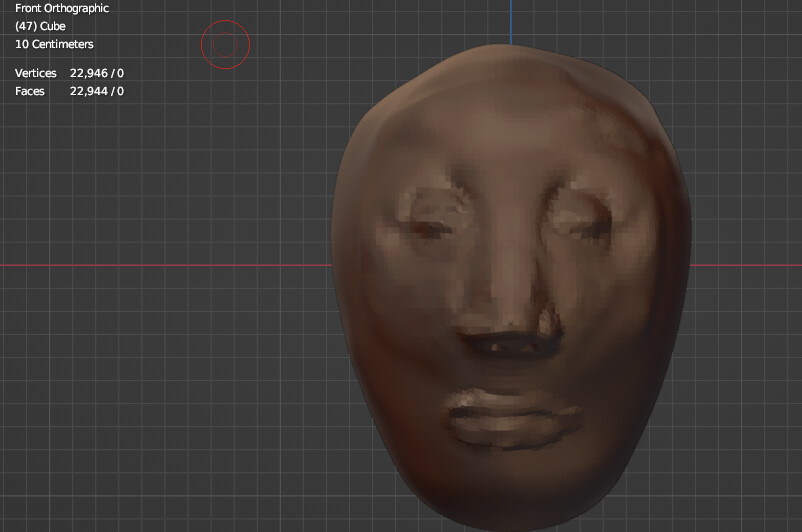

and the chin and lips and cheekbones took the longest time to figure out.


i meant no offense good sir.
