You don’t fold the singularity around yourself in space, as space is the point of cohesion for both particle and antiparticle. A two-dimensional Mobius strip must be folded in three dimensions in order to create the double-sided singularity. A three-dimensional Mobius strip must be folded in the fourth dimension, and so on. That means universe and anti-universe fold upon the higher-dimensional zero point, which can only be achieved by consciousness itself.

Finished the day with one missing library causing the crate to not compile. I adb pulled the lib64 folder from the device, perhaps not the best choice, and pointed the compiler to the folder, which got rid of the missing library warnings for libc, libm and so on. Unfortunately it looks like on the current Android version the Quest is running, libunwind has been replaced by libunwindstack, and maybe it’s the lack of coffee, but I just can’t figure out how to tell the compiler to use unwindstack instead, or whether that’s even the appropriate thing to do. Will look at it with fresh eyes tomorrow.

How would that be done?

What experiment(s) would be required to prove this?

This is interesting.

Would you say that UPX had something to do with you coming to this realization?

If I’m understanding what he’s suggesting correctly, the experiment is the fact that you exist, you think, you self-reflect and you manifest through sheer will and the shaping of your own consciousness.

UPX and a whole bunch of other factors and pieces of information. It still needs further thought/meditation to fully cohere. Oddly, the movie Displacement provided some inspiration. As Wikipedia points out (as do some people replying to Matt Strassler in his article about antiparticles), photons are very strange beasts: “Some particles, such as the photon, are their own antiparticle”, due to being electrically neutral. There is a very good discussion of the idea of antimatter travelling backward through time here, but as usual the comments are much more interesting than the purported explanation itself. The movie was based on the idea that photons can communicate backward in time because they are their own antiparticle.

However, taking that idea further, conservation of energy is really the principle that causes symmetry to be required. QFT is built on more assumptions than a beach has grains of sand; one of these is the idea of lines of force, another is mathematical symmetry. One question that naturally arises from consideration of QFT is how symmetry can be maintained in the face of the discrete, quantized levels of energy that particles can have. Much as people might find it funny to believe we are part of a computer simulation, this kind of pixelisation is a symptom of a larger problem in QFT, a “missing piece” if you will.

I believe topology holds part of the answer (and there are actual physicists, rather than computer programmers like myself, who have thought the same; just think about TGD), and Mobius loops are one of the most interesting of these: a single path from a double-sided material, resulting from a singularity point (where the two flipped edges meet). Space and the objects in it appear to follow the rules of symmetry and coherence, but consciousness itself exists outside of these three dimensions, and seems to interact with the world through light/photons and magnetic fields. I can’t provide a straight scientific explanation yet as to why I make this assumption, but it does come from a visualisation of the processes involved. Maybe the Mind's Eye comp in IGX and my many runs of QL are involved.

Continuing to document the compilation journey.

Confirmed that the problems with the linker not finding -lunwind are likely caused by breaking changes in the toolchain after 1.67, which means I need to pin the toolchain in order for it to compile properly. This prompted me to read the Cargo Book cover to cover and more of the Rust Book itself, so I can better handle any further compilation issues and know what I can and can’t do when using this library, which currently targets an old version of the Android API. I need to better understand the process of cross-compilation, especially as it relates to Android and the NDK.

So far haven’t retried the compilation with the old toolchain, gonna try that before putting this to bed for the night. Also been downloading documentation of the Android API level changes in case of internet outage so I can work on trying to update the library to be compatible with the newer API releases. The changes from v22 up to 32 would appear to cover enough A4 pages for a small novel. No wonder the developer has been slow on updating the code, the process of understanding what breaking changes exist looks painful because you have to read the entire set of behavior changes across about 5 different Android versions. It’s a wonder the thing still compiles at all, I suppose that speaks to Android’s backward compatibility in its API and how much of a beast it is.

EDIT: Library and examples compiled successfully, now I just have to wrestle with the APK packaging command tomorrow and adb installing it to the device for testing. With any luck I should have a working app tomorrow. Then the fun of documenting what I did and beginning to tweak this to create my own apps actually begins.

Well, that’s a wrap on getting the first simple scene to compile and run in headset. Gonna try the other examples and compile them into APKs, then try writing my own code over the next week.

Main problem I had with the APK was the wrong version of the Android API. I was getting confused by the versioning of the NDK and the SDK, not realizing the two version numbers are completely independent of each other. NDK 22 and SDK 28 together did the trick, once I found the texture files I had missed downloading. Also got to learn how to use adb logcat properly to find where the damned thing ™ was failing. So now I can relax for the rest of the day and let all of this knowledge percolate in my brain.

I think IGX helped in getting it going. Recon-wise, though, I'm definitely recognising the limitations of my current dev environment and hoping I can get that sorted real soon. Still, compared to compiling X Windows on an old 586 back in my Uni days, relatively painless.

Artificial intelligence can be a pain when it takes over your IDE.

Android Studio has inline type hinting, which seems to be on by default. I was reading through the code for Crab Saber, and Android Studio inserts a type hint with a question mark after it, like “&mut?”. This is really confusing, as the question mark operator has a special meaning in Rust. I spent a good fifteen minutes of my life that I’m never getting back trying to google the meaning of &mut? when no such type actually exists. Turned that garbage off with prejudice. I don’t need no stinking IDE telling me what it thinks my typing should be.

Also, ChatGPT continues to be next to useless when it comes to code samples. Asking it about reading assets from an APK file, it tried to insist on using JNI and the horrible Java function name mangling to read assets using Java types, rather than providing me an example of using ndk::asset with AAssetManager and AAsset to read the assets in.

Having considered things thoroughly, it makes sense to make good use of the asset manager rather than storing all the textures and models within the ELF. As long as good memory management is followed there shouldn’t be any big performance hit.

As I read over this code base I’m beginning to see why it has been difficult to stabilize an API around VR. In some areas there really is still a distinct lack of standardization, despite the OpenXR standard. In some ways this is a good thing, as it can lead to optimisations and preferred ways of doing things, but there have been some things that I’ve been surprised by.

Two interrelated things have surprised me when looking into Vulkan and OpenXR: I’ve found very little material on mixing traditional three-dimensional content with two-dimensional content, especially text and graphical user interfaces.

For example, the library I’m using at the moment effectively takes an approach of converting the 2 dimensional content of the GUI into something that will fit on a mesh, and uses colliders to determine if a button or field is hovered. Which makes sense for a lower level API but effectively means a lot more work to evolve a working GUI.
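
The collider-based hover detection described above boils down to intersecting a controller ray with a flat panel and turning the hit point into 2D panel coordinates. Here's a minimal sketch of that idea, assuming a rectangular panel and buttons laid out in normalised 0..1 panel space; `Vec3`, `Panel`, `Button` and `hovered` are hypothetical names for illustration, not the API of the library I'm using:

```rust
/// Minimal 3D vector for this sketch; a real project would use a math crate.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Vec3 {
    x: f32,
    y: f32,
    z: f32,
}

impl Vec3 {
    fn new(x: f32, y: f32, z: f32) -> Self {
        Vec3 { x, y, z }
    }
    fn add(self, o: Vec3) -> Vec3 {
        Vec3::new(self.x + o.x, self.y + o.y, self.z + o.z)
    }
    fn sub(self, o: Vec3) -> Vec3 {
        Vec3::new(self.x - o.x, self.y - o.y, self.z - o.z)
    }
    fn scale(self, s: f32) -> Vec3 {
        Vec3::new(self.x * s, self.y * s, self.z * s)
    }
    fn dot(self, o: Vec3) -> f32 {
        self.x * o.x + self.y * o.y + self.z * o.z
    }
    fn cross(self, o: Vec3) -> Vec3 {
        Vec3::new(
            self.y * o.z - self.z * o.y,
            self.z * o.x - self.x * o.z,
            self.x * o.y - self.y * o.x,
        )
    }
}

/// Flat rectangular GUI panel in world space: `origin` is the top-left corner,
/// `right` and `down` are the (perpendicular) edge vectors spanning width and height.
struct Panel {
    origin: Vec3,
    right: Vec3,
    down: Vec3,
}

/// A button given as a rectangle in normalised panel coordinates (0..1 on each axis).
struct Button {
    name: &'static str,
    min: (f32, f32),
    max: (f32, f32),
}

impl Panel {
    /// Intersect a controller ray with the panel plane and return the hit point in
    /// normalised (u, v) panel coordinates, or None if the ray misses the panel.
    fn hit_uv(&self, ray_origin: Vec3, ray_dir: Vec3) -> Option<(f32, f32)> {
        let normal = self.right.cross(self.down);
        let denom = normal.dot(ray_dir);
        if denom.abs() < 1e-6 {
            return None; // ray is parallel to the panel
        }
        let t = normal.dot(self.origin.sub(ray_origin)) / denom;
        if t < 0.0 {
            return None; // panel is behind the controller
        }
        let local = ray_origin.add(ray_dir.scale(t)).sub(self.origin);
        let u = local.dot(self.right) / self.right.dot(self.right);
        let v = local.dot(self.down) / self.down.dot(self.down);
        let inside = (0.0..=1.0).contains(&u) && (0.0..=1.0).contains(&v);
        inside.then_some((u, v))
    }
}

/// Return the first button whose rectangle contains the hit point.
fn hovered(buttons: &[Button], (u, v): (f32, f32)) -> Option<&Button> {
    buttons
        .iter()
        .find(|b| u >= b.min.0 && u <= b.max.0 && v >= b.min.1 && v <= b.max.1)
}
```

Once the hit is in panel space, the rest of the GUI logic can stay plain 2D rectangle tests, which is roughly the amount of extra work a low-level API leaves to you.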

The other problem, drawing text, involves converting what is effectively the vector art for the different glyphs, scaling them appropriately, and so on. Then what happens if you want to change that text?

From what I can tell, the best way of representing text in VR is going to have to be something like a series of mesh objects which contain the corresponding glyph and textual representation, and this is basically what I’ve seen described at this URL. I’m not sure whether things have improved significantly since 2020, and if they have, it’s not clear online. But then I have not yet read through the 3.5MB OpenXR API specification or any of the multiple books that have been written on the subject. I really want to find out how other popular apps do text overlays, as WebXR seems to be able to handle it with ease.
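
A rough sketch of the glyph-quad idea, assuming a hypothetical monospaced bitmap atlas that holds printable ASCII in a grid of equal cells (real text rendering would need per-glyph metrics, kerning and probably signed distance fields). `MonoAtlas` and `GlyphQuad` are made-up names. It does show why changing the text is cheap in this scheme: you rebuild the quad list and re-upload the vertex data.

```rust
/// One textured quad per character: where it sits on the text line and which
/// cell of the glyph atlas texture it samples.
#[derive(Debug, Clone, Copy, PartialEq)]
struct GlyphQuad {
    /// Left edge of the quad in local text-space units.
    x: f32,
    /// UV rectangle in the atlas: (u_min, v_min, u_max, v_max).
    uv: (f32, f32, f32, f32),
}

/// Hypothetical monospaced atlas: printable ASCII (32..=126) stored row by row
/// in a grid of `cols` x `rows` equally sized cells.
struct MonoAtlas {
    cols: u32,
    rows: u32,
    /// Horizontal distance between consecutive characters.
    advance: f32,
}

impl MonoAtlas {
    /// UV rectangle for a character, or None if it is not in the atlas.
    fn uv_for(&self, c: char) -> Option<(f32, f32, f32, f32)> {
        let code = c as u32;
        if !(32..=126).contains(&code) {
            return None;
        }
        let index = code - 32;
        let (col, row) = (index % self.cols, index / self.cols);
        if row >= self.rows {
            return None;
        }
        let (w, h) = (1.0 / self.cols as f32, 1.0 / self.rows as f32);
        Some((
            col as f32 * w,
            row as f32 * h,
            (col + 1) as f32 * w,
            (row + 1) as f32 * h,
        ))
    }

    /// Build the quad list for a string. Changing the text means calling this
    /// again; characters missing from the atlas leave a gap.
    fn layout(&self, text: &str) -> Vec<GlyphQuad> {
        text.chars()
            .enumerate()
            .filter_map(|(i, c)| {
                self.uv_for(c).map(|uv| GlyphQuad { x: i as f32 * self.advance, uv })
            })
            .collect()
    }
}
```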

At least all of these technical problems give me plenty of material to use when creating a social media presence for VR developers in the future.

I don’t know if it’s IGX or QL working but I’m finally understanding the reason for all these pointer types in Rust and why Arc is so important. I was just reading the definitions for the Sync and Send traits and it’s all starting to come together.

To share data across thread boundaries, each thread needs its own pointer to the data, so the pointer is cloned. For reference-counted types like Rc<T>, the reference count is updated non-atomically, so two threads cloning or dropping the pointer at the same time could race on the count, which would be undefined behavior. To get around this, the Send trait is only automatically implemented when the compiler determines it is appropriate, which it is not for Rc<T>. Sync, on the other hand, is for types where it is safe to share references between threads: a type T is Sync exactly when &T is Send.
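
To make the Rc/Arc difference concrete, here's a small sketch (the function name and numbers are just for illustration) where each spawned thread gets its own Arc clone of the same data:

```rust
use std::sync::Arc;
use std::thread;

/// Sum the same shared data from `n` threads, each holding its own Arc clone.
/// Returns the combined total and the strong count once all threads are done.
fn sum_in_threads(data: Vec<u64>, n: usize) -> (u64, usize) {
    let shared = Arc::new(data);
    let handles: Vec<_> = (0..n)
        .map(|_| {
            // Cloning an Arc increments its reference count atomically.
            let local = Arc::clone(&shared);
            thread::spawn(move || local.iter().sum::<u64>())
        })
        .collect();
    // Using std::rc::Rc instead of Arc above is rejected at compile time:
    // Rc<Vec<u64>> is not Send, so it cannot be moved into thread::spawn.
    let total: u64 = handles.into_iter().map(|h| h.join().unwrap()).sum();
    // Every thread's clone has been dropped by now, so only `shared` remains.
    (total, Arc::strong_count(&shared))
}
```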

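The consequence of this is that an Arc<T> is itself only Send and Sync, and so can only be shared between threads, when T is both Send and Sync. This means any type with non-thread-safe interior mutability, such as RefCell<T>, cannot be shared across threads even when wrapped in an Arc; it needs a thread-safe wrapper like Mutex<T> instead.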

In this way the compiler is able to restrict non-thread-safe mutability to a single thread, so that data races cannot arise.
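
A sketch of the usual pattern for shared mutable state, using Mutex as the thread-safe replacement for RefCell (`collect_ids` is a made-up example function). Swapping the Mutex for a RefCell here would fail to compile, because Arc<RefCell<_>> is not Send:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Each of `n` threads pushes its id into one shared Vec.
fn collect_ids(n: usize) -> Vec<usize> {
    // Mutex gives thread-safe interior mutability; Arc gives shared ownership.
    let shared = Arc::new(Mutex::new(Vec::new()));
    let handles: Vec<_> = (0..n)
        .map(|id| {
            let shared = Arc::clone(&shared);
            thread::spawn(move || {
                // The lock guard is dropped at the end of this statement.
                shared.lock().unwrap().push(id);
            })
        })
        .collect();
    for h in handles {
        h.join().unwrap();
    }
    let mut ids: Vec<usize> = shared.lock().unwrap().to_vec();
    ids.sort(); // threads finish in an arbitrary order
    ids
}
```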

There is also more to it. Primitive types like i64 or u8 implement Copy, so they are usually copied rather than shared, and each copy is its own memory location. Shared mutation is what the borrow checker polices: at any one time you can have either one mutable reference to a value or any number of shared references, but not both. For the cases where you genuinely need to update a shared number from several threads, Rust also provides atomic versions of the primitive types (AtomicI64, AtomicU8 and so on in std::sync::atomic), so that they can be safely encapsulated within an Arc and shared between threads while avoiding undefined behavior.
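
And the atomic version, where an AtomicU64 inside an Arc is bumped from several threads without any lock. A sketch; Ordering::Relaxed is assumed to be enough here because the counter is only read after every thread has been joined:

```rust
use std::sync::atomic::{AtomicU64, Ordering};
use std::sync::Arc;
use std::thread;

/// Increment one shared counter `per_thread` times from each of `threads` threads.
fn count_with_atomic(threads: usize, per_thread: u64) -> u64 {
    let counter = Arc::new(AtomicU64::new(0));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_thread {
                    // Atomic read-modify-write: no increments are lost.
                    counter.fetch_add(1, Ordering::Relaxed);
                }
            })
        })
        .collect();
    for h in handles {
        h.join().unwrap(); // join makes every thread's writes visible here
    }
    counter.load(Ordering::Relaxed)
}
```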

It really is a brilliant design, and the implication is that once you wrap your head around the reasons for Rust’s type design, you can write multithreaded code with confidence that you will not introduce data races into safe code, because it is clear when code or data is safe or unsafe to share.

Emacs for productivity and mathematical brouhaha

I haven’t been as productive in the way of code today as I wanted to be, but learning new things, there’s been plenty. I decided I was tired of using Android Studio to view the Rust code for Hotham; that thing is more bloated than my intestines.

I have Cygwin64 installed on my PC with Emacs 28, so I opened the code up with that to have a look and started googling for whether there was a major mode for Rust. Of course there is. Then I ended up learning that the windowed version of Emacs can use different themes - light, dark, deeper-blue, wheatgrass etc. That was nice because I much prefer dark mode (less bugs, lol… bad programmer joke).

Then while reviewing keybindings for the Rust major mode I discovered Emacs has a calculator built in. But not just any calculator. One that can work with complex numbers, vectors and matrices. I can define an affine transformation matrix and easily calculate the inverse of that affine matrix with a single key! Furthermore, you can calculate inverse and determinant etc even for matrices where the values are formulas like x^2 or a+b! You can also solve algebraic equations, do differentiation or integration, etc…
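
For reference, the affine inverse the calculator produces follows the block rule [A t; 0 1]^-1 = [A^-1, -A^-1 t; 0 1], so you only ever invert the linear part and then remap the translation. A minimal 2D sketch of that rule in Rust; `Affine2` is a hypothetical type for illustration, not from any particular math crate:

```rust
/// 2D affine transform stored as the top two rows of a 3x3 homogeneous matrix:
/// [a b tx]
/// [c d ty]
/// [0 0 1 ]
#[derive(Debug, Clone, Copy, PartialEq)]
struct Affine2 {
    a: f64,
    b: f64,
    c: f64,
    d: f64,
    tx: f64,
    ty: f64,
}

impl Affine2 {
    /// Inverse via [A t; 0 1]^-1 = [A^-1, -A^-1 t; 0 1]. None if A is singular.
    fn inverse(&self) -> Option<Affine2> {
        let det = self.a * self.d - self.b * self.c;
        if det.abs() < 1e-12 {
            return None;
        }
        // Inverse of the 2x2 linear part.
        let (ia, ib, ic, id) = (self.d / det, -self.b / det, -self.c / det, self.a / det);
        Some(Affine2 {
            a: ia,
            b: ib,
            c: ic,
            d: id,
            // New translation is -A^-1 * t.
            tx: -(ia * self.tx + ib * self.ty),
            ty: -(ic * self.tx + id * self.ty),
        })
    }

    /// Apply the transform to a point.
    fn apply(&self, (x, y): (f64, f64)) -> (f64, f64) {
        (self.a * x + self.b * y + self.tx, self.c * x + self.d * y + self.ty)
    }
}
```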

Even better, after a little jiggery pokery installing gnuplot and gnuplot-x11 and the xinit package in Cygwin64, I could run emacs through an xterm in Windows 10 and plot 2 and 3 dimensional graphs of an equation of my choice directly from within the calculator.

After I got over how awesome the inbuilt calculator was, I watched some videos on org mode. What this taught me is that I can create my own business process manual using Org mode and fill it with different levels of hierarchical headings, and then include code blocks for specific design patterns to search out and even run directly from within Emacs (not that I would want to run it from within Emacs). As well as doing basic project management and todo lists using org-schedule.

So workflow-wise, after compiling the appropriate library docs, I can copy these to a separate folder and create links to them within an org mode file for quick reference to module documentation without having to run cargo doc or run any fancy IDE.

There’s also org mode add-ons for other things and an Emacs Gmail add-on, but that's probably going a bit too far.

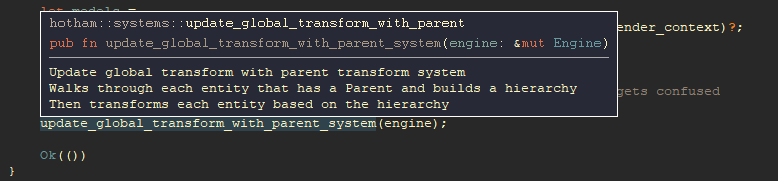

EDIT: Finally got LSP support / syntax highlighting and code completion working in Emacs! Apparently lsp-mode does not like running in Cygwin because /cygdrive/d is not a real Windows path, so when rust-analyzer is started, lsp-mode fails when it tries to pass the source code path to the tool. Once I installed the native Windows build of Emacs 28.2 and updated my .emacs to add the MELPA repository, rust-mode and lsp-mode installed like a charm along with other MELPA .el’s. I can now read function documentation for not only the standard library but Hotham itself. To make it work I needed to add my test package into the workspace and ensure the Rust edition was correctly set.

This should make development a hell of a lot easier.

Additional Emacs magic for development

So I found a few additional things to make life easier for Rust developers. In Emacs, installing company and then enabling company-mode on the buffer is what enables code completion by default in lsp-mode. The code completion is so much quicker and less obtrusive than typical code completion in Android Studio/JetBrains IntelliJ and displays the full method signature for each potential match.

Then there’s tab-line-mode, which adds a row of tabs to each window so you can switch between the buffers (files) open in it without needing a hotkey, pretty much like what you would expect in a normal text editor or IDE. And then you can save entire window configurations as tabs with tab-bar-mode and switch between them.

I have winner-mode and desktop-save-mode enabled by default with backup files going to a Trash folder and the MELPA repository for add-ons set up by default, and finally a couple of custom faces to fontify the tab-line.

The best thing about this configuration is that it is using less than 160MB of RAM at peak working set, whereas Android Studio’s peak working set for just having a few Rust files open was 1.6GB - 10 times as much memory to do basically the same thing!

I can have a custom shell running in another buffer to run build commands and then cut and paste pre-defined build scripts from an Org mode document for different configurations and be just as efficient as if I was working in Studio. The only thing it doesn’t do is let you emulate an Android device and debug your code line by line, but… why the hell would you want to do that for a VR app anyway? It's simpler to just set up a custom build script to adb install the resulting APK file to your device so that when the build buffer stops scrolling you can just chuck your headset on and beta test.
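
A sketch of that build-then-install step written as a small Rust helper rather than a shell script, assuming the APK is built with `cargo apk build --release` (cargo-apk) and that you pass in the path where it lands; the path in the test is hypothetical. `adb install -r` reinstalls over the existing copy of the app:

```rust
use std::path::Path;
use std::process::Command;

/// Build the `adb install -r <apk>` command for a freshly built APK.
/// `-r` replaces an already-installed copy of the app.
fn adb_install_command(apk: &Path) -> Command {
    let mut cmd = Command::new("adb");
    cmd.arg("install").arg("-r").arg(apk);
    cmd
}

/// Build the APK (assumes cargo-apk is installed), then push it to the headset.
/// Returns Ok(false) if either step exits with a failure status.
fn build_and_install(apk: &Path) -> std::io::Result<bool> {
    let built = Command::new("cargo")
        .args(["apk", "build", "--release"])
        .status()?;
    if !built.success() {
        return Ok(false);
    }
    Ok(adb_install_command(apk).status()?.success())
}
```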

So I think with IGX I’m starting to recover some of my old workflow and hackable operating system ethic. I had someone this morning try to sell me on using WSL2 and ditching Cygwin, but I passed on that with prejudice. There should be no favoritism with tools, the questions should really be:

- Are they fit for purpose?

- How efficient are they?

- What is the licensing and how configurable are they?

- What are their limitations?

- Can those limitations be worked around?

I credit IGX with helping me track down what limitation of Cygwin was causing rust-analyzer to choke, and understand what situations Cygwin should and shouldn’t be used in the development life cycle. As well as pushing me to look in the direction of more efficiency. Just because an IDE is the industry standard doesn’t make it a good fit for everyone’s needs.

OK, now the recon begins. As I start to write code, I'm struggling to understand proper scoping, and to get from the contrived coding examples in the documentation to a working code base that uses non-deprecated functions.

My compiler is looking at me like “wtf are you doing?” The number of errors multiplies and I think “should I just rename this entire readassets module to android and pass the AttachGuard around in a struct to avoid having to return all these messy jni objects?”

Rust warned me that ndk_glue::native_activity was deprecated (probably due to the API changes post Lollipop) and told me to use ndk_context::android_context().vm() instead. Problem is, this is BS. The documentation explains that vm() returns a raw pointer that needs to be cast and passed to jni::JavaVM::from_raw() instead. So far, fair enough. Then the VM must be attached to the current thread, and AssetManager then needs to be found via find_class(), which returns a jni::errors::Result that needs to be checked for errors before the Result<JClass<'local>> is returned.

All of a sudden I’m thinking “this is so ugly! why do they need to make it so difficult?” Head hurting because I smoked too many cigarettes today stressing over how to write this code to interface with AssetManager, going between wanting to write my own android module to interface with it just to teach me and show me I can beat the machine, vs caving and just downloading an external crate to do it faster.

The confusion around the need to use the JNI ended up getting resolved pretty quickly after I decided to take a look at the code and find out where this deprecation warning was coming from. Apparently version 0.6.2 of ndk_glue deprecated native_activity in favor of the horrible interface mentioned due to some kind of race condition. However, that deprecation warning was then removed in 0.7.0 before the library ceased active development.

Because the library I was using had 0.6 as its ndk_glue dependency version, it wasn’t using 0.7, and the warning pops up, causing confusion. 0.7 has the same major version (0), so I’m hoping the changes are non-breaking and that I can bump the dependency to 0.7 in my proof of concept and have the library compile without issues. Strictly speaking, though, Cargo treats a minor-version bump of a 0.x crate as a breaking change, which is exactly why a 0.6 requirement never resolves to 0.7, so this needs testing rather than assuming.
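
Cargo's rule for the default (caret) requirement can be modelled in a few lines. This is a simplified sketch of my own that ignores pre-release tags and the other requirement operators; per the Cargo Book, "0.6" means ^0.6, i.e. >=0.6.0 and <0.7.0:

```rust
/// Simplified model of Cargo's default ("caret") version requirement.
/// `req` is a fully written MAJOR.MINOR.PATCH requirement (for "0.6" the answer
/// is the same as for 0.6.0); `ver` is a published version.
/// Pre-release tags and other operators are ignored.
fn caret_matches(req: (u64, u64, u64), ver: (u64, u64, u64)) -> bool {
    if ver < req {
        return false; // never older than the requirement
    }
    match req {
        (0, 0, patch) => ver == (0, 0, patch), // ^0.0.z: only that exact patch
        (0, minor, _) => ver.0 == 0 && ver.1 == minor, // ^0.y.z: minor acts as "major"
        (major, _, _) => ver.0 == major, // ^x.y.z: same major version
    }
}
```

So `caret_matches((0, 6, 0), (0, 7, 0))` is false, which is why the library keeps pulling 0.6.x until its Cargo.toml is changed.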

It seems ndk_glue is now deprecated in favor of android-activity; however, the game library I’m using is still in the process of migrating to this new paradigm, which involves some changes to the polling code. So the takeaway seems to be: I don’t need to use JNI (Java Native Interface), thank God! But I do need to abstract the Android-specific code, such as reading in glb buffers, into my own implementation, which can be changed later on when android-activity starts being used.
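
One way to do that abstraction, sketched under my own assumptions: loading code depends only on a small trait, with an in-memory implementation for desktop tests. `AssetSource`, `MemoryAssets`, `load_models` and the asset path are hypothetical names, not part of Hotham or the ndk crates. An Android implementation would wrap the ndk crate's asset manager; that part is left as a comment because the exact calls depend on the ndk/android-activity version in use.

```rust
use std::collections::HashMap;
use std::io;

/// Hypothetical abstraction over wherever the app's assets live.
trait AssetSource {
    /// Read the whole asset at `path` into an owned buffer.
    fn read_bytes(&self, path: &str) -> io::Result<Vec<u8>>;
}

/// In-memory implementation, handy for desktop tests.
struct MemoryAssets(HashMap<String, Vec<u8>>);

impl AssetSource for MemoryAssets {
    fn read_bytes(&self, path: &str) -> io::Result<Vec<u8>> {
        self.0
            .get(path)
            .cloned()
            .ok_or_else(|| io::Error::new(io::ErrorKind::NotFound, path.to_string()))
    }
}

// On Android, a second implementation could open the file through the ndk
// crate's AssetManager and copy the asset's buffer into a Vec<u8>. That is an
// assumption about the ndk API version; check its docs before relying on it.

/// Loading code depends only on the trait, so moving from ndk_glue to
/// android-activity later should only touch the Android implementation.
fn load_models(src: &dyn AssetSource, paths: &[&str]) -> io::Result<Vec<Vec<u8>>> {
    paths.iter().map(|p| src.read_bytes(p)).collect()
}
```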

Not much to report today but more of a summary of where I’m at so far.

- I have a meeting with a mentor on Monday to be accountable for my first month of operations and I’ve been writing a brief summary for myself to make presenting easier.

- In doing so I’m coming to grips with just how much I’ve accomplished in one month of still reasonably unfocused activity. I’ve reached a passable level of skill in Blender, I’ve learned enough Rust to fully grasp the way forward to writing good code with it, I’ve selected libraries and system architectures appropriate to my project, and I know what work I need to do and what aspects of my personality I need to improve to achieve a successful proof of concept by the end of month two.

- In terms of other concrete accomplishments, I consider getting Hotham compiled and understanding the basics of OpenXR, as well as customising the hell out of my Emacs as an IDE, to be pretty substantial. I have enough accomplishments and material that was very hard to come by online that I could set up a unique channel for programmers on YouTube or even create and sell 3D assets for money if I had the urge to support the ongoing development process.

I also discovered a rather unique method of creating characters with good topology that was explained by Joey Carlino on his YouTube channel. I still need to get proficient with it, but it should speed up character creation workflow significantly and aid in the creation of props as well. Basically it involves clever use of loop cuts, insetting and beveling to create a base topology for faces or other objects that you can then build upon afterwards with sculpting tools and multires. Also, LoopTools seems to be incredibly handy.

In terms of the insights I mentioned elsewhere from KB, I can see what I need to work on to super-charge my workflow. I almost did another post on my old Black journal about the topic before realising I was rambling more to myself than to the other forum members. So to summarise: the lessons of the carpenter in terms of suffering but enduring and mastery definitely apply here, as well as efficient use of emotional energy and one-pointed concentration. There is definitely non-physical interference that could nudge me in the wrong direction if my mind does not remain laser focused, and it is way too easy to waste time on frivolous stuff that doesn’t get me closer to my project goals. Things that at the time seem horribly important, but really aren’t.

The way to steer away from that external influence has been made clear to me and given me confirmation that I am on the right path with the project and its apparently secondary, secretly primary goals. I failed once in starting a business back in NZ partly because it was my own fault, partly because of those external influences making it easy for me to make stupid mistakes. Determined not to make those same mistakes again.

I trust ChatGPT right now about as far as I could throw it, and that’s not far.

I swear the thing tries to be deliberately misleading when providing code samples. I was encountering problems due to ownership issues in Rust. First I was trying to return an &[u8] from a function which gets the &[u8] from asset.get_buffer(). Rust correctly told me that the buffer dies when the function returns, so I needed to copy the buffer into a Vec<u8>, which owns its heap allocation and can be returned by value.
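
A minimal reproduction of that first error and its fix, using a hypothetical `FakeAsset` type in place of the real asset handle so it runs off-device:

```rust
/// Hypothetical stand-in for an asset handle whose buffer is only valid
/// while the handle itself is alive.
struct FakeAsset {
    data: Vec<u8>,
}

impl FakeAsset {
    fn open(name: &str) -> FakeAsset {
        FakeAsset { data: name.as_bytes().to_vec() }
    }
    /// Borrowed view of the asset's bytes, tied to the lifetime of `self`.
    fn get_buffer(&self) -> &[u8] {
        &self.data
    }
}

// Does NOT compile: the slice borrows `asset`, a local that is dropped
// when the function returns.
// fn read_asset(name: &str) -> &[u8] {
//     let asset = FakeAsset::open(name);
//     asset.get_buffer()
// }

/// Compiles: copy the borrowed bytes into a Vec<u8>, which owns its heap
/// allocation and can be moved out to the caller.
fn read_asset_as_bytes(name: &str) -> Vec<u8> {
    let asset = FakeAsset::open(name);
    asset.get_buffer().to_vec()
}
```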

I needed to use the contents of those vectors and push them as &[u8] onto a Vec<&[u8]>. I was getting scoping errors because the Vec returned by android_helper.read_asset_as_bytes(&asset) only lasted as long as the for loop’s scope. It was ChatGPT’s buggy code that caused the scoping error in the first place, because it didn’t account for the fact that the Vec<&[u8]> needed to be used later down the line.

When I pointed out the errors in its code, it apologised, and then gave me the “corrected” code which still declared the buffer of Vec<&[u8]>'s within the scope of the loop!!

When I pointed this out to it, it apologised again, and gave me some different non working code. This time it tried to push a reference to a Vec<&[u8]> into a buffer declared before the for loop, which would have again failed after the returned value left scope.
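
For the record, a shape that does work: own the bytes in a Vec<Vec<u8>> declared before the loop, and only build the Vec<&[u8]> of borrowed slices afterwards, from storage that outlives every use. `read_asset_stub` is a hypothetical stand-in for android_helper.read_asset_as_bytes:

```rust
/// Hypothetical stand-in for android_helper.read_asset_as_bytes(&asset):
/// returns an owned copy of the asset's bytes.
fn read_asset_stub(name: &str) -> Vec<u8> {
    name.as_bytes().to_vec()
}

/// Load every asset into owned buffers. `owned` is declared before the loop,
/// so the data outlives the loop body instead of dying at the end of each iteration.
fn load_all(names: &[&str]) -> Vec<Vec<u8>> {
    let mut owned = Vec::new();
    for name in names {
        owned.push(read_asset_stub(name)); // push owned Vecs, not borrowed slices
    }
    owned
}

/// Borrow the finished buffers as slices. The returned Vec<&[u8]> is tied to the
/// lifetime of `owned`, so the compiler makes sure the storage outlives the slices.
fn as_slices(owned: &[Vec<u8>]) -> Vec<&[u8]> {
    owned.iter().map(Vec::as_slice).collect()
}
```

The caller keeps the result of `load_all` alive for as long as it needs the slices from `as_slices`.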

I gave up in anger and decided to write the damned code myself. What absolutely gobsmacks me, the utter utterness of it, is that for other people querying the GPT, it gives them good code. So what is with it seeming to selectively mislead people?? It’s a simple problem, a scoping problem, why would it continuously return bad code time and time again only to me? It clearly understood the nature of the problem, and yet returned something that subtly looked right, but wasn’t, every time.

A successful first in person meeting with my mentor in that den of iniquity that is the city centre, and now I’m back home enjoying a quiet glass of something to loosen the tongue and the spirits, the first in several months, just to celebrate the fact that I don’t have to be accountable to anyone but myself and my landlord for the next month or so.

Last night I got my code working for reading in the assets from the APK; now I just need to figure out how to pack the assets into the APK with the correct path and test it to make sure it's working. Then I can do some work on basic navigation and visual/audio cues and figure out setting a skybox or some kind of virtual environment up.

For the rest of today I don’t really want to focus on code; it’s too much brain drain after a day in the city seeing the bruthas out on the streets and the way my sacred trees in the gardens are looking, all their leaves gone. I know it's winter here, but still it feels kind of symbolic of the way that the world has gone (but also the promise of new life around the corner).

I’ve thought about maybe getting back into writing my novel; I’m sure it would go down real well with the types of people who are common in the world today, and I might even be able to sneak in a few life lessons for them in the process. The story of Damien Greene feels just as relevant now as it did back in 2018 when I first penned those heartbreaking words “Every day is now when your memory’s like mine”. And I’m sure the character of Sissy would be well received by the Minnesota community, it was almost prophetic in a way.

So tonight I might watch some old Babylon 5 reruns and just chill, and get back into the work tomorrow. I have a nice meaty sermon by an old exorcist I want to get in too, just to balance the pot. Let’s see how the evening unfolds…

Also just for fun

![Interview with an Emacs Enthusiast in 2023 [Colorized]](https://img.youtube.com/vi/urcL86UpqZc/hqdefault.jpg)