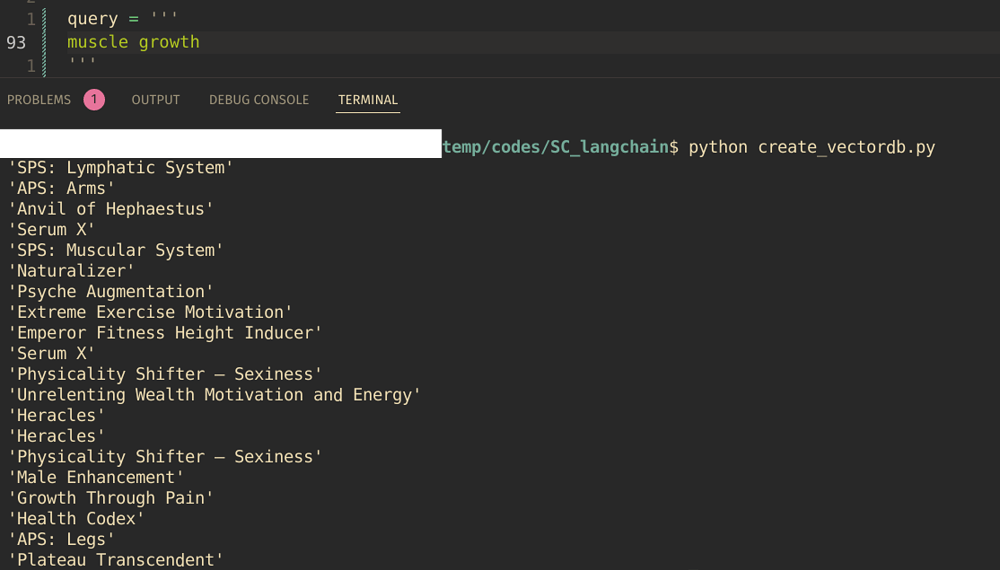

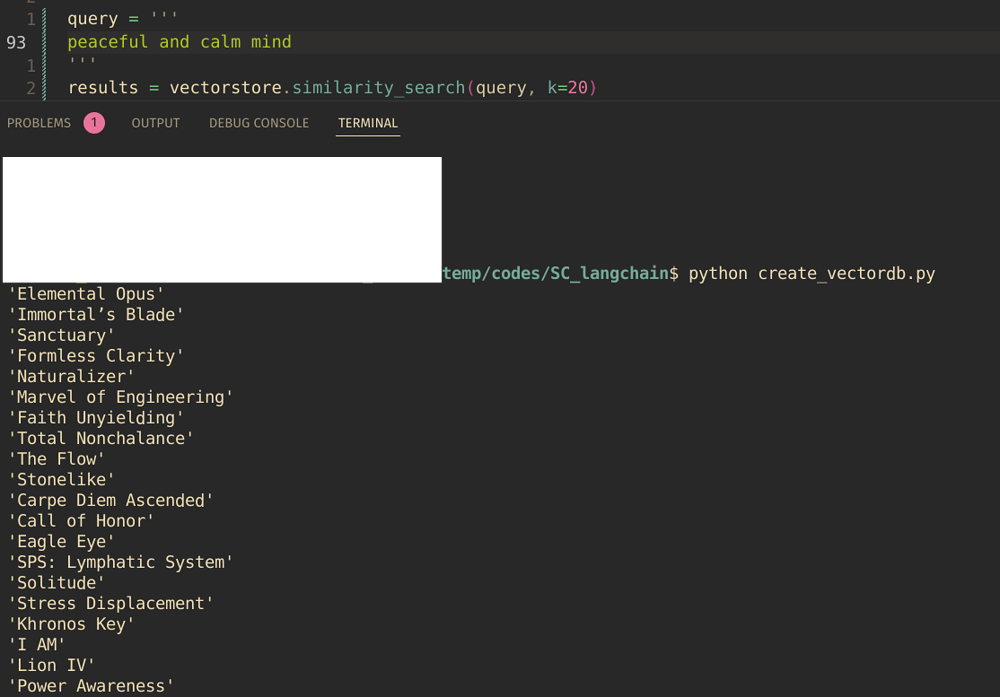

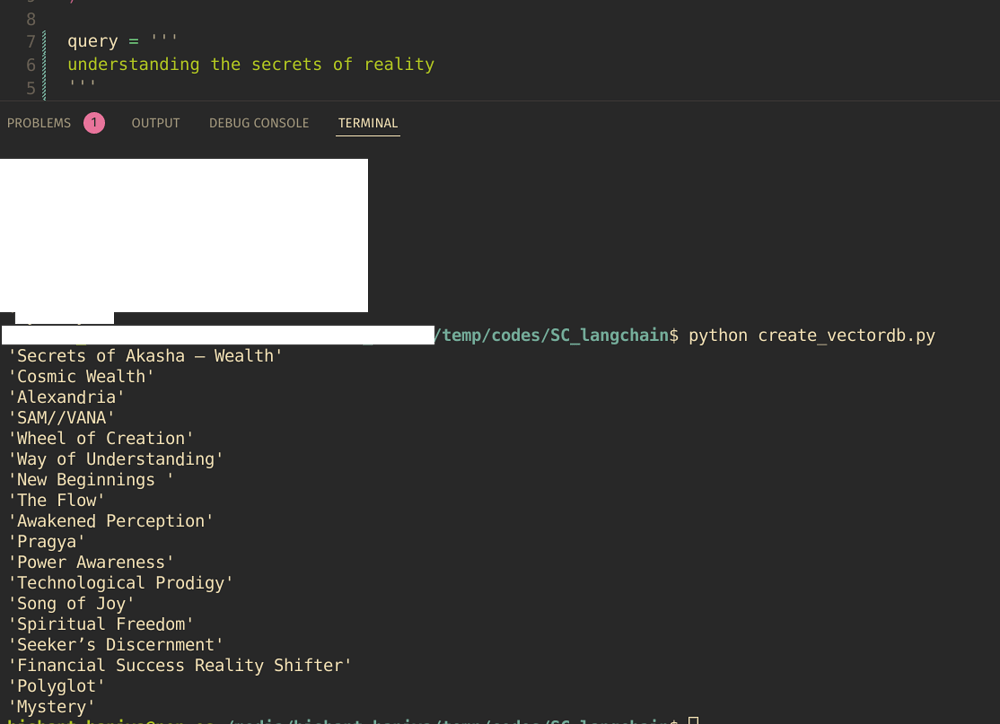

Yeah, and it’s a really good start. Technically you don’t even need to go to the level of an LLM to have something useful, if you have enough pre-trained word embeddings to catch all the variations.

That’s basically what Pinecone is doing. Word embeddings are vectors that result from turning text into numbers and then learning a lower-dimensional representation of it through a neural network (a shallow one in word2vec-style models, CNNs/LSTMs in other setups). They’re kind of a side effect, a very useful side effect that is a big part of what allows NLP to work the way it does. Word vectors store not just a representation of the concept but its context within the corpus it was trained on, and that is what allows you to do what are effectively mathematical operations on them to determine similarity or relationship to other words.

Of course, in order to have enough context to work with, the corpus it is trained on needs to be fairly large. It’s part of the reason I haven’t worked with NLP much yet in my own experimentation: to get enough of a corpus in a form you can use, you normally have to get into stuff like web scraping and cleaning the data you use, removing punctuation, optional stemming and all that good stuff.
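To give a feel for the cleaning step, here is a minimal sketch using only the standard library. The function names `clean_text` and `naive_stem` are made up for illustration, and the suffix stripping is a toy stand-in for a real stemmer (e.g. a Porter stemmer), not something you would want to rely on for a real corpus.

```python
import string


def clean_text(raw: str) -> list[str]:
    """Lowercase the text, drop ASCII punctuation, and split on whitespace."""
    lowered = raw.lower()
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    return no_punct.split()


def naive_stem(token: str) -> str:
    """Toy stemmer (assumption, for illustration only): strip a few common
    suffixes, but only if at least three characters remain."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


tokens = clean_text("Scraping, cleaning and stemming: all that good stuff!")
stems = [naive_stem(t) for t in tokens]
print(tokens)
print(stems)
```

Whether you stem at all depends on the embeddings you are training; it shrinks the vocabulary but also throws away some distinctions.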

The thing that makes using word embeddings somewhat slow is that in order to search for similar terms, you basically compute the dot product (or cosine similarity) of two vectors, and the larger the value, the more similar the words (EDIT: I originally had that mixed up, sorry; see "Dot product for similarity in word to vector computation in NLP" on Data Science Stack Exchange). Doing that across an entire set of word embeddings would be slow, and limited by the resources you have available to iterate over the DataFrame holding your vocabulary of word embeddings. And if you’re trying to train embeddings for multi-word phrases, your vocabulary grows really quickly.
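As a rough illustration of the brute-force search, here is a minimal sketch in plain Python. The three-dimensional vectors in `toy_embeddings` are invented for the example (real embeddings have hundreds of dimensions), and `most_similar` is a hypothetical helper, not any library’s API. It uses cosine similarity, i.e. the dot product of the length-normalised vectors, where values closer to 1 mean more similar.

```python
import math


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy vectors, purely for illustration.
toy_embeddings = {
    "king": [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.90],
    "banana": [0.15, 0.10, 0.95],
}


def most_similar(word, embeddings, top_k=2):
    """Score the query word against every other word, then sort.

    This is the slow part: one similarity computation per vocabulary
    entry, for every single query.
    """
    query = embeddings[word]
    scored = [
        (other, cosine_similarity(query, vec))
        for other, vec in embeddings.items()
        if other != word
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


result = most_similar("king", toy_embeddings)
print(result)
```

With a vocabulary of N entries, every query costs N similarity computations, which is where the scalability concern comes from.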

That being said, I’m sure someone has probably already come up with a solution to that scalability problem. If I were to try to attack the problem myself, I’d probably look at what VQGAN did to solve the scalability issue with image generation. Similar principles would probably apply, and the literature on LLMs might have something too.

Good luck playing with it further! It’s certainly an interesting problem to tackle.

EDIT again: It seems what gives Pinecone and other vector databases the ability to search by similarity efficiently is a ranking system based on nearest-neighbour search. It’s basically very similar to pre-computing a bunch of vector products and sorting them by how close the vectors are to one another in terms of direction (the angle between them). It shouldn’t be too difficult to store items that way in a traditional database, but vector databases are sure handy for cutting out a lot of the compute time!
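A naive version of that "pre-compute and sort" idea could look like the sketch below. This is only my illustration of the principle, not how Pinecone actually works internally; as far as I understand, production vector databases use approximate nearest-neighbour indexes rather than a full pairwise table. `build_neighbour_table` and the toy vectors are made up for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity: compares direction only, ignoring vector length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def build_neighbour_table(embeddings, top_k=2):
    """Pre-compute, for every word, its top_k most similar words.

    Building the table costs O(N^2) similarity computations up front,
    but each later lookup is just a dictionary access.
    """
    table = {}
    for word, vec in embeddings.items():
        scored = [
            (cosine(vec, other_vec), other)
            for other, other_vec in embeddings.items()
            if other != word
        ]
        scored.sort(reverse=True)  # highest similarity first
        table[word] = [other for _, other in scored[:top_k]]
    return table


# Made-up toy vectors, purely for illustration.
toy = {
    "king": [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.90],
    "banana": [0.15, 0.10, 0.95],
}

neighbours = build_neighbour_table(toy, top_k=1)
print(neighbours["king"])
print(neighbours["apple"])
```

The table itself is just key/value data, so it would fit in a traditional database; the trade-off is that the quadratic pre-computation has to be redone (or patched) whenever the vocabulary changes.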