Today was supposed to be a day off, but after I got my obligations outside of the house dealt with I ended up writing more code anyway.

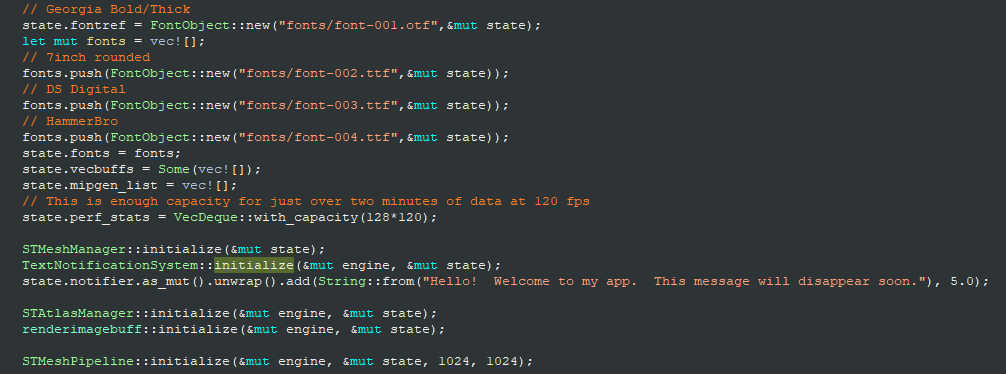

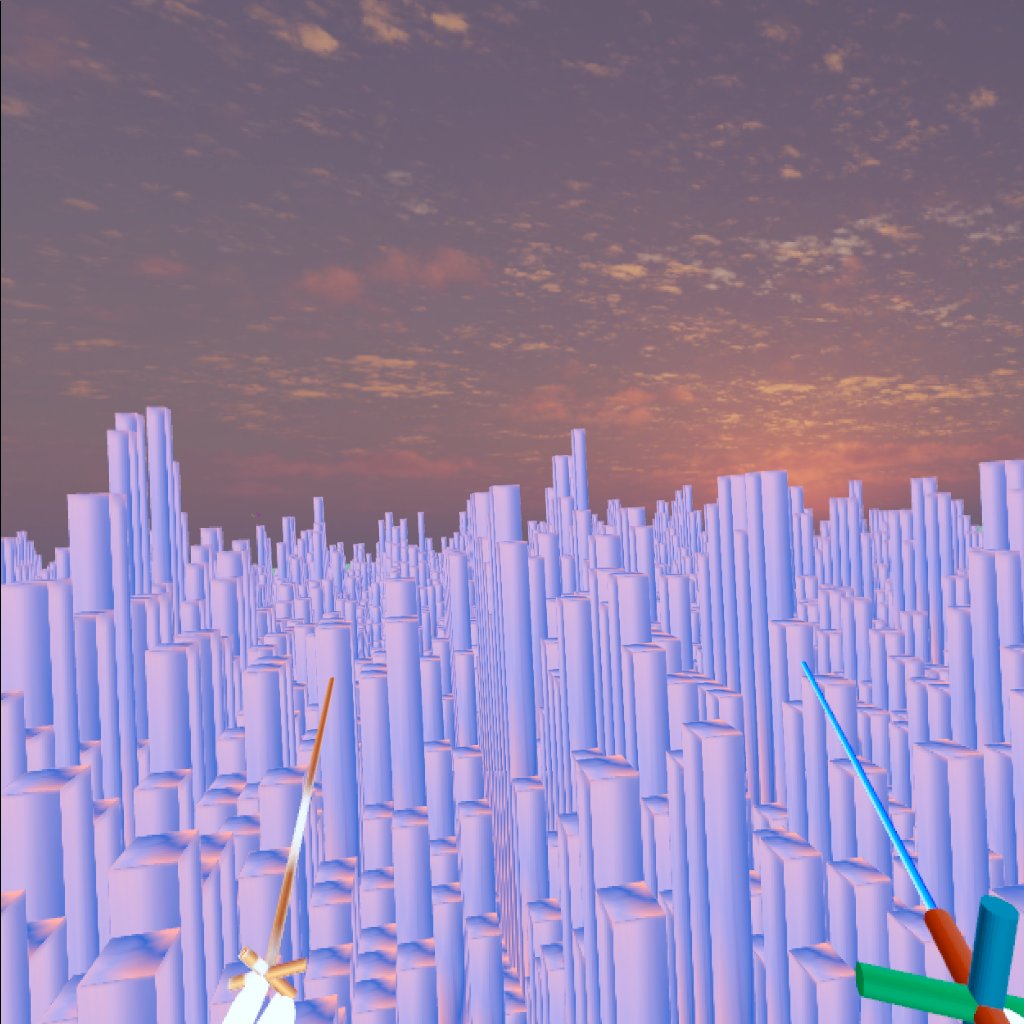

One thing I’m going to need to do, since I'm writing my program with a limited-size vertex/position/index buffer, is write code to manipulate the buffers so I can re-use the slots I’m done with. That is an interesting task, thanks to the way the engine is designed. Every Mesh has an arena_id::Id, and as it turns out the library has no way to delete such an id, so the meshes remain in the arena.

However, a Mesh is composed of a vector of primitives, and each primitive stores its index buffer offset, its number of indices, and its vertex buffer offset… but not its vertex count! So I have to store the vertex count for these specially created meshes that I’ll be re-using as a component in the hecs::World. Then, when it comes time to destroy the existing graph and create a new one, I copy all the following primitives backwards by the combined number of slots of the primitives I’m deleting, decrease the recorded buffer length, and update the shifted primitives with the new vertex and index buffer offsets for each associated mesh.
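Here's a rough Rust sketch of that compaction step, just to pin down the bookkeeping. It doesn't touch the real engine: `PrimitiveSlots` and `compact` are hypothetical names, plain `Vec`s stand in for the GPU-side buffers, and it assumes the primitives are sorted by offset, don't overlap, describe everything in the buffers, and that index values are relative to each primitive's vertex offset (so the indices themselves never need rewriting).

```rust
/// Hypothetical stand-in for one primitive's slice of the shared buffers.
/// `vertex_count` is the value the engine's primitive doesn't store; in the
/// post it lives in a separate hecs component, here it's just a field.
#[derive(Debug, Clone, Copy, PartialEq)]
struct PrimitiveSlots {
    vertex_offset: usize,
    vertex_count: usize,
    index_offset: usize,
    index_count: usize,
}

/// Deletes the primitives flagged in `remove` and compacts both buffers.
/// Survivors slide backwards over the freed slots and get new offsets.
/// Assumes: sorted by offset, no overlaps, primitives cover the whole buffer,
/// and index values are relative to each primitive's vertex_offset.
fn compact(
    vertices: &mut Vec<[f32; 3]>,
    indices: &mut Vec<u32>,
    prims: &mut Vec<PrimitiveSlots>,
    remove: &[bool],
) {
    assert_eq!(prims.len(), remove.len());
    let mut v_write = 0; // next free vertex slot after compaction
    let mut i_write = 0; // next free index slot after compaction
    let mut kept = Vec::with_capacity(prims.len());
    for (p, &del) in prims.iter().zip(remove) {
        if del {
            continue; // its slots are simply overwritten by later survivors
        }
        // copy_within has memmove semantics, so overlapping ranges are fine.
        vertices.copy_within(p.vertex_offset..p.vertex_offset + p.vertex_count, v_write);
        indices.copy_within(p.index_offset..p.index_offset + p.index_count, i_write);
        kept.push(PrimitiveSlots { vertex_offset: v_write, index_offset: i_write, ..*p });
        v_write += p.vertex_count;
        i_write += p.index_count;
    }
    // Shrink the recorded buffer lengths to the compacted size.
    vertices.truncate(v_write);
    indices.truncate(i_write);
    *prims = kept;
}

fn main() {
    // Three meshes packed back to back; delete the middle one.
    let mut verts: Vec<[f32; 3]> = (0..6).map(|i| [i as f32, 0.0, 0.0]).collect();
    let mut idx: Vec<u32> = vec![0, 1, 0, 0, 1, 2, 0, 0, 0];
    let mut prims = vec![
        PrimitiveSlots { vertex_offset: 0, vertex_count: 2, index_offset: 0, index_count: 3 },
        PrimitiveSlots { vertex_offset: 2, vertex_count: 3, index_offset: 3, index_count: 3 },
        PrimitiveSlots { vertex_offset: 5, vertex_count: 1, index_offset: 6, index_count: 3 },
    ];
    compact(&mut verts, &mut idx, &mut prims, &[false, true, false]);
    println!("{:?}", prims);
}
```

Doing it in one forward pass means each surviving primitive is copied at most once, no matter how many deleted primitives sit in front of it.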

Similarly, floating text drawn in the air or on the ground is composed of a series of quad meshes. Rather than delete them (because I can’t), the idea is to mark each quad mesh I draw with an indicator of its orientation (xy, xz or yz), make each mesh I’m done with invisible, and then push its particulars into a buffer. Then, for drawing text in mid-air that isn't attached to an existing texture buffer, I'll write a dedicated function that re-uses any meshes marked as safe to re-use.
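A minimal sketch of that re-use pool in Rust. `MeshHandle`, `Orientation` and `QuadPool` are hypothetical: `MeshHandle` stands in for `arena_id::Id`, the `visible` map stands in for however the engine actually hides a mesh, and "creating" a new quad is just a counter here. Keying the free lists by orientation assumes a quad's orientation is baked into its vertices, so a recycled quad only needs to be moved and retextured.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the engine's mesh id (arena_id::Id in the post).
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
struct MeshHandle(u32);

/// Which plane a text quad lies in.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
enum Orientation {
    Xy,
    Xz,
    Yz,
}

#[derive(Default)]
struct QuadPool {
    free: HashMap<Orientation, Vec<MeshHandle>>, // hidden quads, safe to re-use
    visible: HashMap<MeshHandle, bool>,          // stand-in for engine visibility
    next_id: u32,                                // stand-in for real mesh creation
}

impl QuadPool {
    /// Hide a quad we're done with and park it for later re-use.
    fn release(&mut self, mesh: MeshHandle, orientation: Orientation) {
        self.visible.insert(mesh, false);
        self.free.entry(orientation).or_default().push(mesh);
    }

    /// Re-use a parked quad with the same orientation, or create a new one.
    fn acquire(&mut self, orientation: Orientation) -> MeshHandle {
        let reused = self.free.get_mut(&orientation).and_then(|v| v.pop());
        let mesh = match reused {
            Some(m) => m,
            None => {
                // Placeholder for the engine's real "create quad mesh" call.
                let m = MeshHandle(self.next_id);
                self.next_id += 1;
                m
            }
        };
        self.visible.insert(mesh, true);
        mesh
    }
}

fn main() {
    let mut pool = QuadPool::default();
    let label = pool.acquire(Orientation::Xy);
    pool.release(label, Orientation::Xy);
    // The next xy quad comes back out of the pool instead of the arena.
    let again = pool.acquire(Orientation::Xy);
    println!("{:?} reused as {:?}", label, again);
}
```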


With these things in mind, the only other thing I need to do is be careful about the order I create my meshes in, so that replacing or re-using stuff takes a minimum number of memory copies. I’m thinking I'll reorganize my code to move the creation of the axes and other shit to the start of the graphing logic, so that the gigantoid meshes for the bars or other plotting primitives sit at the end of the vertex/index buffers, removing the need for potentially several overlapping memory copies.
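A quick way to see why the ordering matters: when meshes are deleted from a tightly packed buffer, every surviving mesh that sits after a deleted one has to slide backwards. This hypothetical `vertices_moved` helper just counts those vertices; the mesh sizes in the usage example are made up for illustration.

```rust
/// Counts how many vertices must be copied backwards when the meshes flagged
/// in `remove` are deleted from a packed buffer laid out in `sizes` order.
fn vertices_moved(sizes: &[usize], remove: &[bool]) -> usize {
    let mut gap = 0; // total size of deleted meshes seen so far
    let mut moved = 0;
    for (&size, &del) in sizes.iter().zip(remove) {
        if del {
            gap += size;
        } else if gap > 0 {
            moved += size; // this survivor has to slide back over the gap
        }
    }
    moved
}

fn main() {
    // Made-up sizes: bars, axes, tick marks. Rebuild only the bars.
    let bars_first = vertices_moved(&[1_500_000, 1_000, 300], &[true, false, false]);
    let bars_last = vertices_moved(&[1_000, 300, 1_500_000], &[false, false, true]);
    println!("bars first: {bars_first}, bars last: {bars_last}"); // bars first: 1300, bars last: 0
}
```

With the big data mesh last, throwing it away is just a length decrease; anything placed after it would have to be copied every time the graph is rebuilt.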

It's a complicated-ass way to go about things, but at least it keeps things efficient. Who really wants to keep adding millions of points to a vertex buffer? Besides, in the engine the default maximum vertex buffer length is 2 million, which means I’d be really pushing it to draw two of these graphs (unless I edit the engine source itself and update those numbers… and maybe give the index buffer a larger size than the vertex buffer while I’m at it!)

Sub updates

I had to go walkabout today to get a document signed and witnessed. That involved walking to three or four different destinations. While I was walking, I noticed the same interesting energetic phenomenon I’ve noticed the last couple of times I’ve been out in public. I’m not sure if it's the Hero strength aspects, the new Emperor resilience, or both, but there’s been a strong sense of solidity, of noticing my muscles and using them more. Also a different level of interaction with people.

After the walk, I went out again to see a friend. My friend had some associates with him who were not of the same caliber as him; one of them was clearly on something. They started bad-mouthing him for trying to do something with his life (while the two of us were trying to have an ordinary conversation about running our own businesses), and the situation was going downhill quickly. My friend had control of the situation, but intuitively I knew when the right moment to leave was. My posture during the interaction remained relaxed and strong too. Hero and Emperor haven’t changed my predisposition towards being a non-violent individual, but they have made me calmer in troubling situations.

Also, I think I’m noticing more of the resilience, the ability to push through, especially through physical pain and muscular tension.

And besides, I feel like the system I am developing, with callbacks that are completely independent of the windows themselves, gives me the flexibility I need to eventually write any program with minimal setup.


btw, are you a software developer by trade who does game dev as a hobby?


ugh, whoever said writing a data analysis app like this would be easy? Still, the more I get done before December starts, the better off I’ll be.
