I added multiple texture atlases and a SpriteReference<'a> to make setting up new sprites a little less unwieldy. It’s working, albeit with a reasonable slowdown, but now I’m realizing that rather than spending time on the details of the UI implementation and testing, I need XrHandJointLocationsEXT and XR_EXT_hand_tracking (and to make sure it’s supported on the Quest). This would simplify things so much by allowing somewhat natural typing to be mimicked. Need a few hours’ rest before I start that though.

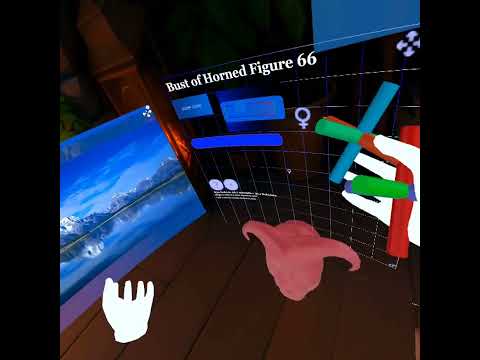

So I now have very basic hand tracking going… enough to draw my hands as a series of gleaming multicolored cubes and to draw lines on a 3-dimensional mesh plane. At 1:30 in the morning I just finished adding the concept and implementation of having both touch on and touch off functions for a joint. For my simple test I just calculated the position where my finger penetrated the “screen”, and if I managed to move more than 10 pixels in some direction, drew a line there. Simplest drawing app ever. But it works. LOL. Tomorrow I test touch off functions and begin to consider how to process multi gesture/joint input (e.g. spread gestures or detecting pinch, stab, etc.).

Continuing to be impressed by the speed of learning with IG

Things continue to evolve. I feel a little fucking loco right now, albeit nothing I can’t manage. Lots of business related stuff finally making progress in the face of it all… the huge task of setting up our gesture oriented windowing system in VR so that this app can finally be fully developed rather than toyed with.

Currently I’m focusing on implementing the spreading gesture, where the fingers spread out from a point horizontally or vertically. The idea is to scale or manipulate the surface the spreading is occurring on. I figured out the easiest way to determine spreading is to take the angle between the linear velocities identified, projected onto the plane directly in front of you, or onto the surface in question. Then if they are within tolerance, probably about 15-20 degrees each side of the 180 degree center, i.e. if we’re between 140-180 degrees separation – at least the normalized to 360 degrees version – then the spreading action is executed. The linear velocities of the hand joints must be at least the tolerance of 1cm/second; anything lower is considered crap.

I almost feel like heading out for a walk now and smoking up nicely while it rains on me heavily enough to soak me clear through. My brain is sick of dealing with mathematics. Every night this week has been high energy intensity on the surface of my skin and meditating like I am a freaking Zen monk. Then waking, sleeping, waking, sleeping until it’s 10 or 11am and well past time to get up.

I continue to be concerned about how slow things are going, and yet at the same time I know everything is at least somewhat on schedule. I need to continue implementing everything that is already differently implemented elsewhere. And hope things will speed up soon. Every day it seems I come across a new mathematical problem to solve. I hope eventually I will come to a point where I can get on with the proper implementation rather than the low level stuff.

So after two days of getting sent slowly insane by the fluctuating velocity vectors, I had a flash of insight. It came after I had spent an hour or two looking at different weighted averages of velocity vectors (including a histogram weighted one).

If the directions of the velocity vectors fluctuate due to uneven hand movement, the line from the initial centroid to the currently tracked joint position is one vector, and the line from the initial centroid to the other tracked joint is also a vector. I can get the angle between those two vectors and it is going to be less fluctuating, since the joint positions themselves remain accurate to within about 1.1cm, even if the velocity vector goes a bit crazy. So the more frames in which I see a near 180 degree separation after the initial recorded centroid, the more certainty I have that I am seeing a spread gesture. This ought to work at slower velocities too.
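
As a rough sketch of the centroid idea (not the actual code from this project): take the two centroid-to-joint vectors, compute the angle between them, and count frames where that angle stays near 180 degrees. The `Vec3` type, the `SpreadDetector` name, the 140 degree threshold and the "consecutive frames" rule are all assumptions made for illustration.

```rust
/// Minimal 3D vector; a real project would more likely use a math crate.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Vec3 {
    pub x: f32,
    pub y: f32,
    pub z: f32,
}

impl Vec3 {
    pub fn sub(self, o: Vec3) -> Vec3 {
        Vec3 { x: self.x - o.x, y: self.y - o.y, z: self.z - o.z }
    }
    pub fn dot(self, o: Vec3) -> f32 {
        self.x * o.x + self.y * o.y + self.z * o.z
    }
    pub fn length(self) -> f32 {
        self.dot(self).sqrt()
    }
}

/// Angle in degrees (0..=180) between the centroid-to-joint vectors.
/// Returns None while either joint is still sitting on the centroid.
pub fn separation_degrees(centroid: Vec3, joint_a: Vec3, joint_b: Vec3) -> Option<f32> {
    let a = joint_a.sub(centroid);
    let b = joint_b.sub(centroid);
    let denom = a.length() * b.length();
    if denom <= f32::EPSILON {
        return None;
    }
    // Clamp guards against rounding pushing the cosine just outside [-1, 1].
    let cos = (a.dot(b) / denom).clamp(-1.0, 1.0);
    Some(cos.acos().to_degrees())
}

/// Hypothetical detector: reports a spread once enough consecutive frames
/// show a separation of at least `min_separation_deg`.
pub struct SpreadDetector {
    centroid: Vec3,
    min_separation_deg: f32,
    frames_needed: u32,
    hits: u32,
}

impl SpreadDetector {
    pub fn new(centroid: Vec3, min_separation_deg: f32, frames_needed: u32) -> Self {
        SpreadDetector { centroid, min_separation_deg, frames_needed, hits: 0 }
    }

    /// Feed one frame of joint positions; returns true once the gesture is confirmed.
    pub fn update(&mut self, joint_a: Vec3, joint_b: Vec3) -> bool {
        match separation_degrees(self.centroid, joint_a, joint_b) {
            Some(deg) if deg >= self.min_separation_deg => self.hits += 1,
            _ => self.hits = 0, // assumption: a bad frame resets the count
        }
        self.hits >= self.frames_needed
    }
}
```

Because this only looks at positions relative to the recorded centroid, a noisy per-frame velocity never enters the calculation, which is the whole point of the insight above.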

I was focusing on the direction the hand appeared to be moving in a given frame rather than focusing on the outcome of how straight the line is! Hopefully this will make spread detection easier. Going to test this later tonight.

Just goes to show, I do get flashes of insight still with IG.

So after getting into the wonders of finger occlusion, jitter and lens aberrations, I decided to give the hand tracking a miss for now in favor of more stable inputs. Quest 3 is apparently doing better with it, but that will be a long time in adoption. In the meantime hand tracking is not well suited to small manipulations or fine motor movements, which rules it out for text input for now unfortunately.

But that was 6-9 days ago and the code continues to evolve. Today I implemented callback notification functions for sprites in my windowing system and started transforming the test program to get it ready for GUI work. Right now I have the first component of my system being tested and I won’t know until tomorrow morning if it’s all good for me to move on to the next component. I’m hoping to have at least all the basic components implemented by Friday so I can write some more concrete code. It takes way too long to implement a 3D windowing system. Said every systems programmer ever LOL.

Happy with where I’ve ended today. The windowing system is still green, but it’s far enough along that I can focus on building components. I’m going to look at circular, sprite-like objects next as well as the resize operation, and some swipe up and down gestures I was totally inspired into by some sci-fi I watched, as well as some potential implementation for a ring style move-and-resize UX.

Status update.

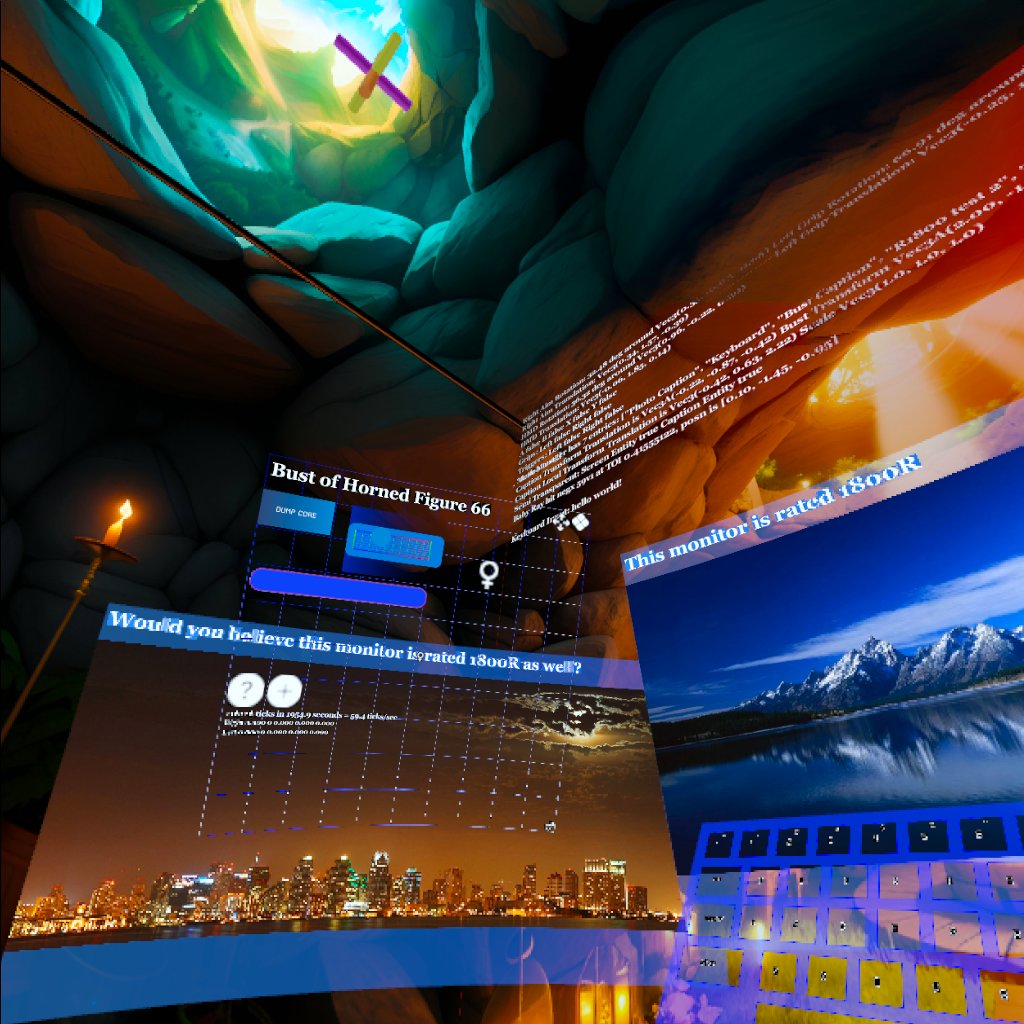

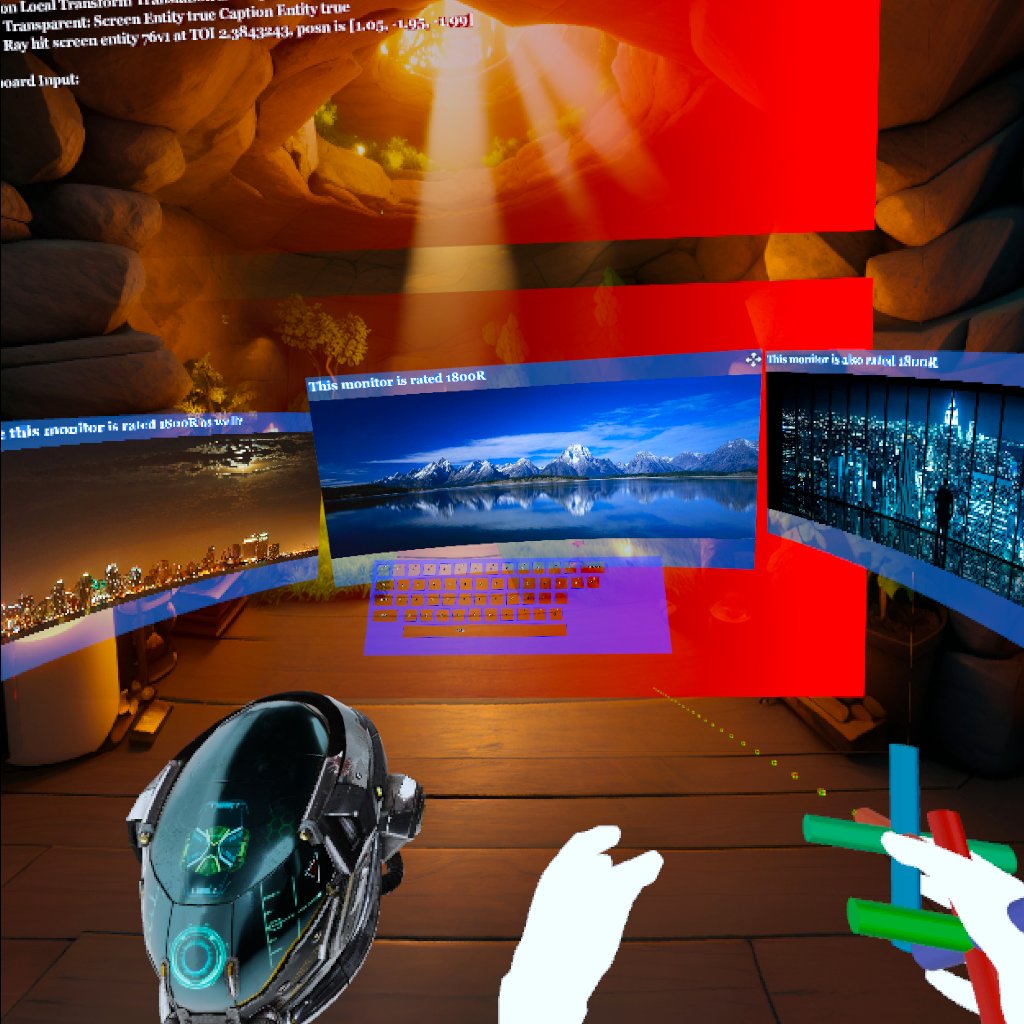

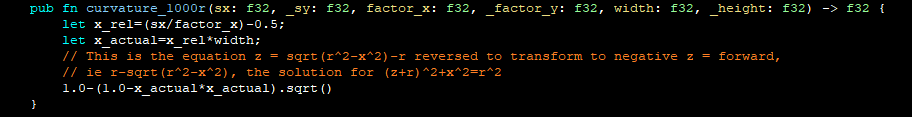

So first of all, I got curved semi-transparent screens working in my windowing system, with accurate R1000 and R1800 factors. Mind you, in VR, a 1m distance to a screen isn’t necessarily so relevant. I’m more likely to angle my transparent screen and lean back to stare at it. But it was a lot of work and I was very satisfied when I got it implemented yesterday. In order to make it work, I had to refactor the code for creation of meshes to have the caller send through an optional mesh options structure with number of segments on both axes and a curvature callback function to get different curvatures (or even funky shit like parabolic or lens like).

Basically the curvature of a curved monitor is defined in terms of the radius of a hypothetical circle on whose perimeter the monitor surface sits. So an R1000 monitor describes an arc on the perimeter of a circle with a 1m radius. This means that to have a curved screen whose minimum curvature displacement is at the center and whose sides describe this circle arc, I had to rearrange the circle equation to solve for z in x^2 + (z+r)^2 = r^2 (a circle whose top touches the origin) and then reverse the polarity for the coordinate system implementation.
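
To spell the rearrangement out: x^2 + (z+r)^2 = r^2 gives z = -r ± sqrt(r^2 - x^2), and the root that passes through the origin is z = -r + sqrt(r^2 - x^2). Flipping the sign gives a displacement of r - sqrt(r^2 - x^2). Here is a minimal sketch of what such a curvature callback might look like; the function name and the `Option` return are assumptions, not the signature used in the actual mesh options structure.

```rust
/// Depth displacement of a curved screen at horizontal offset `x` from its
/// centre, for a circle of the given `radius` (e.g. 1.0 for R1000, 1.8 for
/// R1800, in metres). Zero at the centre, growing towards the edges.
/// Returns None where the circle has no solution (|x| > radius).
pub fn curvature_offset(x: f32, radius: f32) -> Option<f32> {
    if radius <= 0.0 || x.abs() > radius {
        return None;
    }
    // z = -r + sqrt(r^2 - x^2) is the root touching the origin;
    // negating it ("reversing the polarity") gives r - sqrt(r^2 - x^2).
    Some(radius - (radius * radius - x * x).sqrt())
}
```

Swapping this function for a parabola or any other profile is what makes the callback approach flexible; the mesh builder only needs a displacement for each x.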

That was the simple potatoes. Today I got started on the implementation of a slider which works over generics (as much as you can say Rust has generics). Here is where I saw Index Gate help (although not with the stress or emotional control).

For sanity reasons, my window objects have to be cloneable. There are 34 lines of code alone just describing the fields in the fricken thing. For the shader, I was going to need to somehow store in relation to the object some arbitrary data, which for future child mesh types may be any other generic type.

My first intuition was to store the objects in the entity component system. Alas! The ECS requires Component objects be Send + Sync. What does this mean? It means they need to be thread safe.

My temporary storage structure included optional structures which ultimately included raw pointers. The compiler looked at that and said… “nup! not happening!”

So after a lot of fucking around, and interrogating ChatGPT (and having it give me incorrect code a dozen times), I came upon a solution: Box<dyn Any>, or more specifically Box<dyn Cloneable>

But I’m getting ahead of myself. Box<dyn Any> does not satisfy the Clone trait. After attempting to implement my own clone type for dynamic objects I gave up and installed dyn_clone, a crate for cloning dynamic objects.

Once I had the structure allowing cloning, in the mesh creation function I create the Box::new(SliderOptions<T>) and then have the object draw the mesh’s initial state. To do that, it has to go from dyn Any to the concrete type of the stored value.

Well, dyn Cloneable by itself is not dyn Any, and a non-primitive cast does not work. So I had to add as_any and as_any_mut functions to my supertrait to cast the dyn Cloneable to a dyn Any, which then let me downcast the structure to the concrete type.
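
For anyone fighting the same thing, here is a self-contained sketch of the pattern. To keep it free of external crates it uses a handwritten `clone_box` method instead of dyn_clone, so it is a stand-in for the approach described above rather than the real code. The trait methods beyond `as_any`/`as_any_mut`, the `SliderOptions` fields and the `slider_value` helper are all made up for illustration.

```rust
use std::any::Any;

/// Hypothetical version of the `Cloneable` supertrait: cloneable through a
/// trait object, and convertible to `dyn Any` for downcasting.
pub trait Cloneable: Any {
    fn clone_box(&self) -> Box<dyn Cloneable>;
    fn as_any(&self) -> &dyn Any;
    fn as_any_mut(&mut self) -> &mut dyn Any;
}

/// Illustrative options struct; the field names are assumptions.
#[derive(Clone, Debug, PartialEq)]
pub struct SliderOptions<T> {
    pub min: T,
    pub max: T,
    pub value: T,
}

impl<T: Clone + 'static> Cloneable for SliderOptions<T> {
    fn clone_box(&self) -> Box<dyn Cloneable> {
        Box::new(self.clone())
    }
    fn as_any(&self) -> &dyn Any {
        self
    }
    fn as_any_mut(&mut self) -> &mut dyn Any {
        self
    }
}

/// Lets any struct holding a `Box<dyn Cloneable>` use `#[derive(Clone)]`.
impl Clone for Box<dyn Cloneable> {
    fn clone(&self) -> Self {
        // Explicitly dispatch on the trait object inside the box.
        (**self).clone_box()
    }
}

/// Goes from the stored trait object back to the concrete slider type.
/// Returns None if the stored value is not a `SliderOptions<T>`.
pub fn slider_value<T: Clone + 'static>(stored: &dyn Cloneable) -> Option<T> {
    stored
        .as_any()
        .downcast_ref::<SliderOptions<T>>()
        .map(|options| options.value.clone())
}
```

A window struct can then hold something like `options: Box<dyn Cloneable>` and still derive `Clone`. A wrong type parameter on the downcast simply yields `None` instead of undefined behaviour.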

So that took my entire day to figure that shit out. And now I am going to have to spend the rest of the night if I can stomach it implementing the draw function and callbacks for the slider so I can move on to color pickers, text boxes and the like… as many of those as I can get done in the next couple of days before I start implementing the menu structure for the real program.

Rather than accept that I can’t do something in Rust, IG tends to make me search for workarounds or previous solutions to my problem. And I usually find them in less time than I would have needed otherwise.

So, today’s implementations:

- Now have titlebars on the windows.

- The titlebar can be grabbed and moved up and down in the window. I currently have an implementation where the bar snaps back if it’s less than halfway down the page.

- Borders on windows on controller over (and removing the border when exiting the window)

- Windows now have a draw_buffer function which, you know, draws the buffer (it’s kind of self explanatory, kind of not) and tells Vulkan to update all the image buffers that need updating right before the rendering happens.

- The title bars can now be used to move the window around. So it’s a lot more capable of being like an ordinary windowing system, just with unlimited space and two mice.

- Implemented functions to get back content from dynamic by-mesh storage without dicking around

- Fixed my touch off functions. I had the wrong method signature set up for all of them. Each of them now receives 10 parameters. Lmao. Let me enumerate them: the mesh itself, the engine, the program’s state variable, the entities hit by the left and right controllers (if any), the previously hit sprite, and the texture coordinates hit on the left and right entities (x and y) as i32.

All of this was done today. About to go have a walk at nearly 3am in the morning, and then tomorrow when I get up I finish coding and testing the sliders. Pretty happy with all that work, but my body is hella tired after it all. Hopefully I can keep it up.

Don’t know how long I’ll leave this link up for on forum, but here’s the next demo. Apologies for the shitty camera work, I wasn’t going for a Gold Logie or nothing.

So I guess I’m starting to stand on the shoulders of giants. I got some help with my procedural mesh generation code last night and this morning by one of the local members of the Discord I’m on.

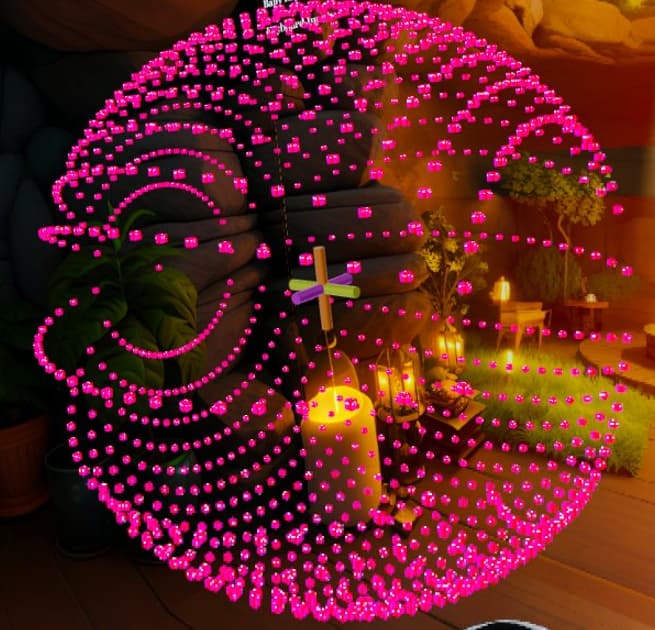

Originally, I had been attempting to generate an optimised sphere algorithm that avoided sin and cos in preference to square root, and getting a rather horrible output:

The missing points are due to the way the square root function falls off as it approaches zero, and the way my indices were being used. And I still had problems with index and position buffer generation because I couldn’t pre-calculate the next coordinates to come up with normals and UVs.

In the midst of mental anguish of biblical proportions where I was swearing like a sailor (and finding out that, surprise surprise, my program can’t handle rendering a scene with over 7500 textures in it at once well), I was told “why not rotate the points using a matrix?” and moreover, a 2x2 matrix at that. Well, 2 trig calculations and O(N squared) 2x2 matrix multiplications is way better than O(N squared) square roots, probably even with fsqrt optimisations.

Even better, although that’s a lot of mul operations (4 per vertex), I had to calculate the ring radius squared and z coordinate squared and division of the z axis and x axis into segments beforehand, whereas with rotation by a 2x2 matrix I get rid of the need to do ugly floating point divisions for every point. And it means my loops are simplified so I can then deal with adding an outer loop reducing the number of normal calculations to only one per vertex rather than recalculating the normal twice.
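
To illustrate the rotation trick on a single ring (a sketch only, not the actual sphere generator): compute sin and cos of the step angle once, then walk around the circle by repeatedly applying the same 2x2 rotation matrix. The function name and the `[f32; 2]` point type are assumptions.

```rust
/// Generates `segments` evenly spaced points on a circle of `radius`,
/// using two trig calls in total and four multiplies per point.
pub fn ring_points(radius: f32, segments: usize) -> Vec<[f32; 2]> {
    // The only trig calls: sin and cos of the angle between neighbours.
    let step = std::f32::consts::TAU / segments as f32;
    let (sin, cos) = step.sin_cos();

    let mut points = Vec::with_capacity(segments);
    let (mut x, mut y) = (radius, 0.0f32);
    for _ in 0..segments {
        points.push([x, y]);
        // Apply the rotation matrix [[cos, -sin], [sin, cos]] to (x, y).
        let next_x = cos * x - sin * y;
        let next_y = sin * x + cos * y;
        x = next_x;
        y = next_y;
    }
    points
}
```

For a sphere the same idea would be applied twice, once around each ring and once from ring to ring. One caveat: rounding error accumulates with each multiplication, so for very high segment counts it may be worth re-seeding the start point every so often.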

Now I get to implement this today and get on with my job of coding the remaining features, rather than pulling my hair out trying to figure out how to optimise spherical coordinate generation.

I guess this goes to the mentors and associates manifestation scripting in Index Gate, and I’m already seeing the implications for the rest of my code-base, Raikov style. Gotta stop doing things the hard way!

Here’s another short update:

I need to add a seam to the sphere to get rid of the unsightly segment with a single UV coordinate. Also need to fix the orientation of the cylinder so it follows the axis being tweaked properly and maybe make it a little smaller so it’s not like I’m holding Monkey’s staff. And I need to catch touches on the 3 color bars so the components can be sampled without swipe up and down.

Swipe is a little less intuitive than I thought at the moment thanks to the differing sensitivity of the 3 axes and the lack of a direction of movement right to left and front to back. But it’s a start.

I also want to add color spaces other than Lab, like Hsv. It shouldn’t add too many lines of code as the palette crate already deals with all the conversions for you. But there are little niggly details like the fact Lab has to take white point into consideration, and some color spaces are cylinders or cones rather than quasi-spherical. That means I’m probably going to have to add another layer of abstraction.

Index Gate / sub related

I tracked down and solved a rather nasty bug over the last two days. Well, more like a single day, but… my procedural mesh generation code was causing program data to be overwritten, which caused my program to think the parent mesh was some ridiculously large u32 in texture width/height with zero size, and made it calculate the square root of a negative number as a result.

It turned out that when the position and vertex buffers had to allocate more capacity, possibly due to my little tricks to get around the borrow checker in places, it assumed the memory for this mesh was free. At least, that’s the TLDR explanation. So I had to specify in my mesh generation that my sphere had 6s^2-3s indices and 2s^2+2 vertices where s is the number of segments specified. This ended up being a small performance boost anyway.
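
Here is a small sketch of sizing the buffers up front, using the counts quoted above. Using `Vec::with_capacity` for this is an assumption on my part for the example (the real buffers may be allocated differently), and the function names and element types are made up.

```rust
/// Vertex and index counts for a sphere with `segments` segments,
/// using the formulas quoted above: 2s^2 + 2 vertices, 6s^2 - 3s indices.
pub fn sphere_buffer_sizes(segments: usize) -> (usize, usize) {
    let s = segments;
    (2 * s * s + 2, 6 * s * s - 3 * s)
}

/// Allocates position and index buffers at their final size, so pushing
/// the generated data never has to grow (and therefore move) the buffers.
pub fn allocate_sphere_buffers(segments: usize) -> (Vec<[f32; 3]>, Vec<u32>) {
    let (vertex_count, index_count) = sphere_buffer_sizes(segments);
    (
        Vec::with_capacity(vertex_count),
        Vec::with_capacity(index_count),
    )
}
```

A `Vec` does not reallocate while its length stays within its capacity, so any raw pointer taken into the buffer stays valid for the whole generation pass. That is also where the small performance boost would come from: one allocation instead of repeated grow-and-copy.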

My thinking in tracking down the bug was initially chaotic – removing code or changing it subtly and recompiling to see if it fixed the problem. However as time progressed it shifted to what I’d call the Index Gate detective mode, where you start getting more in tune with the computer. I had that before IG, but I guess I’m starting to notice more when I switch between modes. I feel like Hero helps in potentizing IG by directing it towards learning the craft and having that calm, methodical almost zen flow.

Had a day off today largely. I started work on toroids but other than that it was shopping, snoozing, and watching reruns of the Expanse.

My procedurally generated toroid is a mess of triangles which I need to fix tomorrow. Getting the ordering of points right (CCW vs CW) is a hard thing for me when you combine it with trying to figure out the direction of rotation. Even I have off days sometimes.

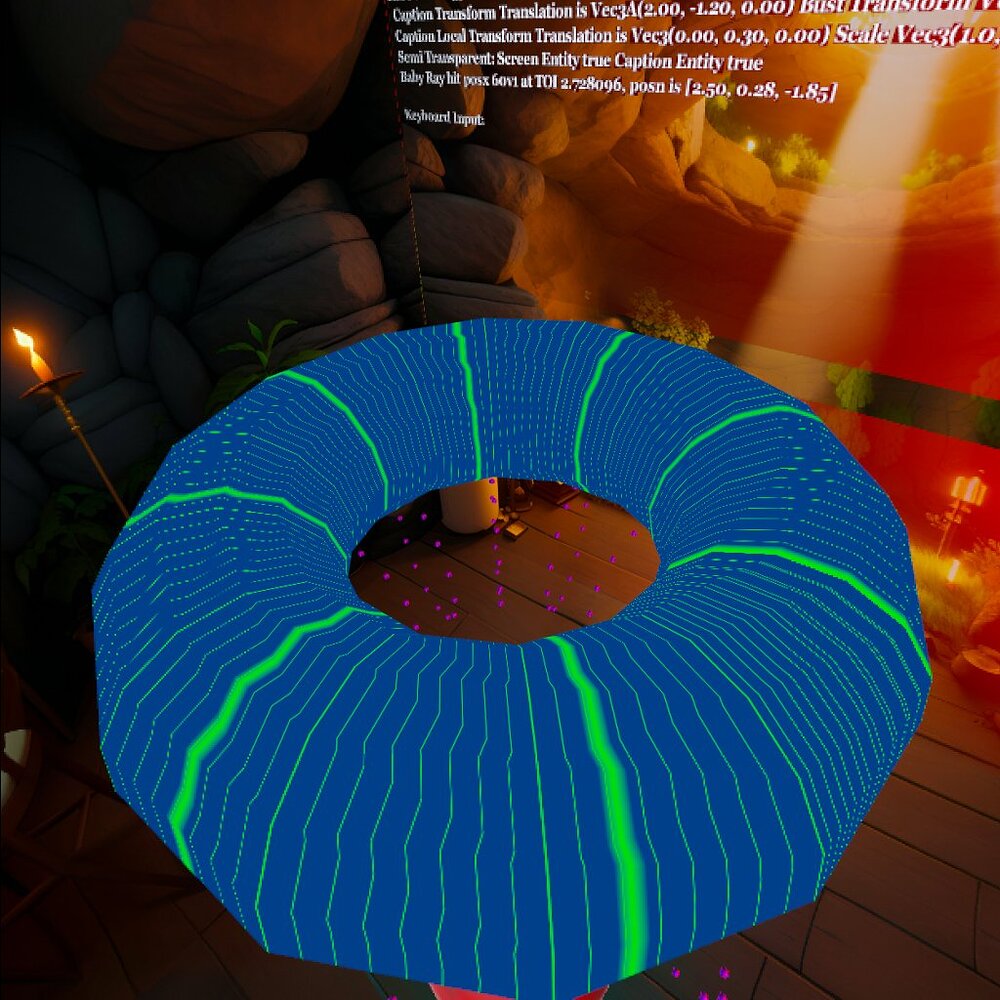

So I finally got toroids working:

I’m a little concerned the normals being defined here as the value at the point rather than the normal of the quad may have caused the problems with the grid lines being wavy, although it could just as easily be me using the wrong UV function. I’ll have to experiment a little to see what works best.

This was pretty tricky to work out; the points of the toroid ring needed to be rotated first by the rotation of the segments of the circle, then by the rotation of the outer circle around its different axis of rotation, and then because winding differed from segment to segment I had to check the dot product of the averaged normals of the quad against the normal of the cross product of the sides of the quad, to determine which was CW vs CCW.
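
The winding check at the end can be sketched roughly like this (illustrative only; the function name, the array-based vector type and the corner ordering are assumptions): compare the face normal from the cross product of two quad edges against the summed vertex normals, and flip the index order when they disagree.

```rust
type V3 = [f32; 3];

fn sub(a: V3, b: V3) -> V3 {
    [a[0] - b[0], a[1] - b[1], a[2] - b[2]]
}

fn cross(a: V3, b: V3) -> V3 {
    [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]
}

fn dot(a: V3, b: V3) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Returns the corner order for a quad such that the face normal implied by
/// the winding points the same way as the vertex normals.
/// `positions` are the corners in the order they were generated.
pub fn quad_winding(positions: &[V3; 4], normals: &[V3; 4]) -> [usize; 4] {
    // Face normal implied by the generated order (edge 0->1 cross edge 0->3).
    let face = cross(
        sub(positions[1], positions[0]),
        sub(positions[3], positions[0]),
    );
    // Summing is enough: dividing by 4 to average would not change the sign.
    let mut summed = [0.0f32; 3];
    for n in normals {
        for k in 0..3 {
            summed[k] += n[k];
        }
    }
    if dot(face, summed) >= 0.0 {
        [0, 1, 2, 3] // already consistent with the normals
    } else {
        [0, 3, 2, 1] // reversed winding
    }
}
```

Whether the "consistent" order counts as CW or CCW on screen depends on the handedness of the coordinate system and the front-face setting in the pipeline, so the two branches may need swapping for a given renderer.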

I’ve started using Bard for GPT queries. Bard still gets things wrong… A LOT. But it’s ten times better than GPT-3.5 and at least points me in the right direction sometimes. Slightly better than a broken clock.

Since I’m now one week away from my original proposed release date for something tangible, I’m going to switch now to implementing wrist menus. I’ve had a visual in my head for a while now of floating semi-transparent circular icons above a watch-like screen, as well as parented sliders or tools to pop up upon clicking on each. Now that I have the capability to render semi-transparent screens as well as primitives somewhat easily, as well as dealing with parenting and storage, this should hopefully be fairly simple.

Today I got left, right, and centered justification of text in columns working. Although it’s too slow to run even using the raw pointer manipulation I’m doing now frame by frame without dropping the frame rate into the 20s, a little bit of glyph caching should fix that (well, a lot I suppose). I guess eventually I should move to just having the renderpass blit the text as sprites and precalculate the character glyphs into texture atlases.

Moving the text flowing to only running once to generate the two columns as sprites improved things significantly. With that addition and the addition of me turning most of my debug functionality off (that debug functionality also involved drawing further dynamic text every fricken frame), my FPS boosted to around 50-54 fps, even while calculating 66,000 color interpolations to unnecessarily re-texture a sphere every tick.

Target FPS for most games is 72 fps (although some say 60, and others again say 90) so really, if I figured out a way to move the text to pre-rendered glyphs blitted via a renderpass, it should be pretty easy to get the 1.5x boost I need.

The ab_glyph library is good, but I’ve probably been using it the wrong way. Generating the glyph outlines over and over again is not the most efficient way to use the CPU. I’m going to see how far I can push the optimisation in the next day or two before I move to the wrist menu. The speed boost is worth it to ensure users avoid motion sickness and to make sure I have a reasonable margin for when I need to add mipmapping back in again.

Update #2.

Demonstrating text flow, those pesky toroids, a bit of how you navigate around the place, the double sided screens, and me testing the frame rate and what’s chewing up the most ticks with an update on demand performance stats window!

Seeing this played back gives me hope I’ll get something of substance done by the end of the month.

I recently mentioned I’d started using Bard for GPT queries and it was better than a broken clock (compared to OpenAI’s horrendous ChatGPT 3.5 available for free which is worse than a broken clock for my type of programming enquiries).

Today my confidence in its abilities has improved further after a discussion of Order Independent Transparency. I thought it worthwhile to post the discussion here. Sub wise, I suppose this is an example of how IG has introduced me to better tools to do my job.

Background: I was recently made aware of a method of implementing order independent transparency in Vulkan or OpenGL by using linked lists of fragments created using atomic operations available in OpenGL 4.2+, and Vulkan 1.2+ with extensions. This also appears to be demonstrated in the nvpro-samples/vk_order_independent_transparency repository on GitHub (“Demonstrates seven different techniques for order-independent transparency in Vulkan”), and it produces results that are free of problems arising from overlapping meshes.

My question: Are you aware of this methodology of using linked lists for OIT, and if so, are you aware of any benchmarks of this method compared to other transparency techniques?

Bard’s response:

Yes, I am aware of the methodology of using linked lists for OIT. It is a relatively new technique, and there are not yet many benchmarks comparing it to other transparency techniques. However, the benchmarks presented in the document you linked to show that the linked list algorithm can be very competitive, especially for scenes with a large number of overlapping meshes.

Here is a summary of the benchmarks from the document:

| Algorithm | Average FPS |
| --- | --- |
| Linked List | 60.4 |
| Conservative Rasterization | 52.8 |
| Early Z-Prepass | 47.6 |
| Deferred Shading | 43.2 |
| Forward+ | 39.1 |
| Forward | 35.8 |
| Blended | 32.5 |

As you can see, the linked list algorithm achieves the highest average FPS. It is also important to note that the linked list algorithm is more scalable than some of the other algorithms, such as early Z-prepass and deferred shading. This means that it can perform well even in scenes with a large number of objects.

Overall, the linked list algorithm is a promising new technique for OIT. It is worth considering for use in applications where performance and scalability are important.

Websites reviewed

As you will see, it actually reviewed the links available from the github (and presumably from Google search results) to give me actual benchmarks. I asked for some sources:

Thank you! Can you refer me to what document or websites provide these benchmarks?

Sure. The document that presents the benchmarks I mentioned in my previous response is called “Order-Independent Transparency with Per-Pixel Linked Lists”. It is available on the Cornell Computer Science website: https://discourse.panda3d.org/t/order-independent-transparency-using-per-pixel-linked-lists/28875

The document also provides a good overview of the linked list algorithm and how it works.

In addition to the document, you can also find information about the linked list algorithm on a few other websites, such as:

GPU Pro 7: Advanced Rendering Techniques: https://www.routledge.com/GPU-Pro-7-Advanced-Rendering-Techniques/Engel/p/book/9781498742535

Real-Time Rendering, 4th Edition: https://www.realtimerendering.com/

Game Tech Dev: Order-Independent Transparency Update: GitHub - GameTechDev/AOIT-Update: Adaptive Order Independant Transparency Sample

I hope this is helpful!

That was impressive. I decided to test the waters further with a question that didn’t directly reference a website:

That is helpful. One further related question: You also mentioned Z pre-pass. I have come to understand that a Z pre-pass can further improve performance in scenes with overlapping objects by reducing the number of fragments. Is there any discussion of a hybrid method or the concurrent use of both of these techniques?

Its response:

Yes, there is some discussion of hybrid methods that use both Z-prepass and linked lists for OIT.

One such method is described in the paper “Order-Independent Transparency with Per-Pixel Linked Lists” that I mentioned in my previous response. In this method, a Z-prepass is used to identify the fragments that are closest to the camera. These fragments are then added to a linked list, which is then used to render the scene.

Another hybrid method is described in the paper “Order-Independent Transparency for Deferred Rendering”. In this method, a Z-prepass is used to create a depth buffer. The depth buffer is then used to sort the fragments in the linked list before they are rendered.

Hybrid methods can offer a number of advantages over using either Z-prepass or linked lists alone. For example, hybrid methods can reduce the number of fragments that need to be rendered, which can improve performance. Additionally, hybrid methods can be more robust to certain types of artifacts, such as z-fighting.

However, hybrid methods can also be more complex to implement than using either Z-prepass or linked lists alone. Additionally, hybrid methods may not always be the best choice for all scenes. For example, hybrid methods may not be necessary for scenes with a small number of overlapping objects.

Overall, whether or not to use a hybrid method for OIT depends on a number of factors, such as the specific scene being rendered and the desired performance and quality.

Here are some additional references that discuss hybrid methods for OIT:

“A Hybrid Approach to Order-Independent Transparency” by Akenine-Möller et al.

“Order-Independent Transparency Using a Hybrid of Z-Prepass and Linked Lists” by Engel et al.

“Order-Independent Transparency for Deferred Rendering” by Bavoil et al.

I hope this is helpful!

Goddamn, that is SO MUCH more informative than Chat jippity. And its ability to generate code seems to be better, for Rust at least. However, the code aspect is still not perfect. It makes shit up like ChatGPT; one example I’ll mention is generating code which passed render pass related information to ash::vk using the Some() pattern, which was a glaring error (you would never use Some() to pass data to a crate that calls a foreign function interface). Still, it has been able to generate example code, after some coaxing, that at least gives more believable subpass dependency information than I’ve got from OpenAI’s GPT.

Overall, I’m super impressed by the responses above from a clarity and technical point of view!

Well, I’m less and less impressed by Bard’s ability to code. And my last two days have been utter hell where I broke down several times over my attempt at depth buffering not working. I’ve even started to doubt my understanding of what a depth pass even is in Vulkan.

I think the problems are related to the differences between OpenGL and Vulkan. That and Vulkan’s complexity. Either way, I had my depth pass causing my code to crash over nothing more than the attachment references I specified in my subpass creation. A completely empty main function with no out variables specified in the layout, and I could set the attachments to “unused”, I could set the field to null, I could turn on color writes or turn them off and the damned thing still crashed!

And when I was able to get something working, it ran at 1/2 to 1/3 of the speed of the code without the added depth pass. Eventually, frustrated beyond all belief, I gave up and commented out all of my second subpass creation and my commands to proceed to the next subpass, the bindings for the second subpass, and so on, leaving just the single subpass with no dependencies. And the code is working again, possibly slightly faster because of the lack of dependencies, but still with the transparency artifacts that I began this whole damned process in the first place for.

I’m tempted to leave further optimization for the moment because the stress I’ve had today was more than any human should have to bear. It shouldn’t be this difficult just to create a depth subpass!! And now I’m thinking… is the depth_test aspect of the depth stencil settings Vulkan’s equivalent of the depth pass? I don’t think it can be, because I keep hearing of other people discussing depth passes in Vulkan, but they just remain mysterious and elusive as ever, and I have not found one skerrick of code that implements multi view rendering with a depth pass. And the Discord was helpful the first night, but then subsequent people on the Discord point blank ignored my questions, ignored me even.

Perhaps for future projects I should switch to OpenGL.

I think this is why I’m hanging for NLE… Vulkan is so complex and this process of creating programs in VR too, that you really need something special to succeed as a small developer. I look forward to seeing what becomes available in the next couple of days.

I spent the last two days refactoring my code and figuring out how to get ASTC compression working. Since this is a bit of a doozy, I’ll describe in detail my process for fixing the problems I encountered, both as an assist to other developers, and as a demonstration of IG helping me with finding workarounds.

The Hotham library recommends using ASTC texture compression as a way of reducing the sampling bandwidth on the GPU for textures. Unfortunately, when I attempted an install of squisher, it failed with some random library needing Rust 1.70.1 or higher. So I git cloned the repository and cargo built the targets, and then had to recompile again when using cargo install while explicitly specifying the root folder of the pulled repository. That was the easy part.

The next problem was that squisher kept giving me a broken pipe error 109. This was because toktx’s astc support seems to be broken… for me at least. It consistently produced a zero length output file.

I began to wonder after examining the man pages for toktx and the newer ktx encode/create commands whether UASTC wasn’t the way to go. However, encoding to UASTC produced files with the format set to VK_FORMAT_UNDEFINED – super helpful (sarcasm) for importing textures into a program.

I then tried ARM’s ASTC Encoder, whose Github had recently had an update to release 4.6.0. However, while this encoder successfully creates KTX images which I could then change to KTX2 using the previously mentioned ktx2ktx2, it explicitly did not support mipmaps.

Now, having no mipmaps is kinda useless; the whole reason you would want to encode an image in one of these formats is so you can use it on the GPU in rendering a scene!

I went back to the toktx problem and asked Bard if it was aware of a way to specify the format. It told me there was a known issue which people were waiting on a solution for, and offered a workaround of using one of the many other texture formats that wasn’t what I wanted. Wonderful if it was true. However, Bard’s response was… let’s just say, a big fib.

When I asked it for sources for its assertions, it cheerfully provided me, over the course of 3 or 4 responses, with 3 or 4 non existent Github issues. It claimed there was a Github issue #111 which they were tracking the problem on. However, this was a lie. Issue #111 on the tracker was closed and was related to an entirely different issue. It then told me it was issue 141, then issue 724 or 726… neither were related to my problem in any way.

When I pointed this out to it, it apologized profusely and then told me again to wait on a solution to the non-existent Github issue in the same response.

I gave up on Bard for a solution to the problem. I went looking for other free solutions other than the ARM ASTC Encoder and KTX2, which Google was confidently telling me were the only ones that supported ASTC. I came upon a post about ASTC support being removed from another program. However, an old version of that program which still supported ASTC was still available.

AMD’s Compressonator supports multiple formats. And it can encode and decode on the CPU if necessary, which was necessary on my ancient 2020 Intel laptop with a GPU older than the hills. Version 4.2.5185 supported ASTC, and then support for ASTC was removed in the next release. Thankfully, old releases are still available on their Github.

Now, even with a full mip chain, many textures still glitch like a bad acid trip. It’s possible I need to turn anisotropy on again or increase the LOD bias. The problem is that if you have a tiled floor or even a box in front of you which has any level of intricacy to its texture (say, a wood grain for example), as you get even 5 metres away in virtual space, you begin to experience the dreaded z-fighting. Why?

Well, if you’ve ever looked at the input coming from a head mounted display, while the translation information is reasonably stable, on the Quest 2, the rotation axis goes up and down like a yoyo. Within very small limits – about 0.01 to 0.03 on an axis – but it’s doing this constantly. When you are redrawing the screen each tick, if you are taking your views from the HMD, each time the GPU samples your textures it will be getting a slightly different result.

It’s significant enough that, on my headset, any lines that are a single pixel in width will break out into moire patterns, or will break apart into dashes, until you get so close to the display that it’s like you’re trying to kiss the screen.

Now, I believe the problems are aggravated in Hotham because its PBR renderer uses f16 floating point arithmetic to speed up the render. However, in the interface, it takes its views from OpenXR, which provides the views for each eye as f32. And those f32 views are themselves fluctuating by these tiny amounts because of the limitations of the hardware.
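To get a feel for how coarse f16 is, here is a rough sketch that computes the gap between neighbouring half precision values at a given magnitude. It assumes standard IEEE 754 half precision (10 fraction bits) and only covers f16's normal range. `f16_spacing` is a made-up helper, and I haven't checked exactly which values Hotham's shaders keep in f16.

```rust
/// Spacing between adjacent f16 (IEEE 754 half precision) values near `x`.
/// Only meaningful for magnitudes in f16's normal range
/// (roughly 6.1e-5 up to 65504); the exponent is clamped to that range.
fn f16_spacing(x: f32) -> f32 {
    // Unbiased binary exponent of x, read straight from the f32 bits.
    // The 0xff mask drops the sign bit, so negative inputs work too.
    let exponent = ((x.to_bits() >> 23) & 0xff) as i32 - 127;
    // f16 stores 10 fraction bits, so one step is 2^(exponent - 10).
    let step_exponent = exponent.clamp(-14, 15) - 10;
    // Build the power of two directly from its bit pattern so it is exact.
    f32::from_bits(((step_exponent + 127) as u32) << 23)
}
```

At a value of 5.0 the spacing comes out to 0.00390625, so if a coordinate in metres were stored in f16, anything 5 metres out could only be placed to within about 4 mm. An f32 at the same magnitude resolves well under a micron.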

Here’s a GIF to demonstrate the problem.

When you combine these factors, trying to get a stable image is incredibly tricky. What I’ve noticed is that a lot of games force the user to lock in a very limited range of views. That helps, because then you can use tricks. You can make any font you display to the user super big so that it doesn’t glitch when it’s too far away. But I think the real solution is to granularize (quantize) the data coming back from the HMD just enough to reduce that shaking, and then use mipmaps to make the rest of the problem go away. And they need to be very carefully generated mipmaps, using a method that produces the least distortion for the type of image you’re shrinking.
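Here is a minimal sketch of what I mean by granularizing, assuming the head rotation arrives as a quaternion `[x, y, z, w]` and that a fixed grid step is good enough. `snap_rotation` and the step value are placeholders I made up, not something from Hotham or OpenXR, and I haven't tuned any of this on the headset yet.

```rust
/// Snap a rotation quaternion `[x, y, z, w]` to a grid of size `step`,
/// then renormalize so it is still a valid rotation.
fn snap_rotation(q: [f32; 4], step: f32) -> [f32; 4] {
    // Round every component to the nearest multiple of `step`.
    let snapped = q.map(|c| (c / step).round() * step);
    let norm = snapped.iter().map(|c| c * c).sum::<f32>().sqrt();
    if norm == 0.0 {
        // Everything rounded away: fall back to the identity rotation.
        return [0.0, 0.0, 0.0, 1.0];
    }
    snapped.map(|c| c / norm)
}
```

With a step of 0.05, inputs whose y component wobbles between 0.01 and 0.02 both snap to the same rotation, so the view stops shaking. The catch is that a value sitting right on a grid boundary will still flip back and forth, so some hysteresis or a low-pass filter on top would probably be needed.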

So that’s where I am at currently. The refactoring let me take out the old floor and a few of the props which were kind of irrelevant and get some practice at generating and texturing objects on the fly. I’m glad that with the help of IG, and God, of course, I managed to find a solution to the problem of ASTC support sucking in virtually every major popular program. I really do suspect the problems with toktx are due to my GPU being ancient as the hills. But it’s irrelevant now.

Another thing I learned about with respect to VR is a feature called asynchronous spacewarp (and its close cousin asynchronous timewarp). It’s normally enabled automatically on the Oculus Quest 2 on apps that don’t manage to make a 90Hz refresh rate, to enable head rotation to supposedly be more smooth.

What it does is take the frames of video it’s received previously and extrapolate new frames from them if the application is struggling to keep up, so that the user experience is smoother.

Well, that might be great for certain applications, but as you would expect, in conjunction with that weird glitching the HMD’s rotation makes, it has the potential to introduce even more noise into an image. Apparently, Oculus recommends rendering head-locked content like HUDs in their own layer and using the compositor.

Now that I’ve started looking for them, the moire patterns are everywhere, just well masked. It seems I’m starting to become aware of the tricks developers use to get around the problem.

Even in games made with the Wonderland VR engine, you see these. Worlds Demolisher is a good example of a game which at first glance is really smooth, but on the Quest if you look closely you’ll notice lines glitching, especially where there is a sharp transition between colors.

I’ve started noticing how some engines like Wonderland (or people building with it) will blur objects in the foreground or background that might otherwise glitch. Like in the previous game I mentioned, the gigantic planet/moon in the sky, if you look at it, is composed almost entirely of smooth color gradients. The ball, when it’s moving, is blurred. The pointer on screen is clearly drawn on in such a way that it is fixed. It’s not a 3D object, it’s just pixel manipulation, so it’s less prone to artifacts.

I still haven’t learned how to turn ASW off – apparently you can do it through the bog standard debug app, but I’d really love to be able to just run an adb command to turn it off. Probably not gonna happen though…