“an invisible mississippi, ganges or a nile

I can feel the quiet river rage

forcin’ my lips into a smile

don’t believe that the world is empty

just too noisy to hear the sound

I can feel the quiet river rage

and I’m fallin’ down

saved by the river now…”

– Live, Feel the Quiet River Rage

Spent the last 6 hours or so writing a tutorial for the game library I’m using. It’s probably about 75% done to my liking; I still need to add material on OpenXR input, and a little more on compilation, environment variables and code signing.

Next I have to deal with font layout, including ascent and descent, kerning, advance and bearing, plus the loop that updates the texture’s content only when it changes. Then I’ll start building my app interface, maybe using egui or some other library for layout. It’s now at a point where I can actually start a private repository for the code.
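The basic single-line layout loop can be sketched as follows. This is a minimal, hypothetical sketch that assumes pixel-unit metrics have already been read from the font. `GlyphMetrics`, `layout_line` and the kerning table are illustrative names, not the API of any particular font crate.

```rust
use std::collections::HashMap;

/// Hypothetical per-glyph metrics in pixels. The field names are illustrative,
/// not taken from a specific font crate.
#[derive(Clone, Copy, Debug)]
struct GlyphMetrics {
    advance: f32,      // how far to move the pen after drawing this glyph
    left_bearing: f32, // offset from the pen position to the bitmap's left edge
    top: f32,          // distance from the baseline up to the bitmap's top edge
}

/// Returns (char, x, y) for the top-left corner of each glyph bitmap, with the
/// top of the line box at (x, y). Screen y grows downward, so the baseline sits
/// `ascent` pixels below the top. Descent would only matter for line spacing.
fn layout_line(
    text: &str,
    x: f32,
    y: f32,
    ascent: f32,
    metrics: &HashMap<char, GlyphMetrics>,
    kerning: &HashMap<(char, char), f32>,
) -> Vec<(char, f32, f32)> {
    let baseline = y + ascent;
    let mut pen_x = x;
    let mut prev: Option<char> = None;
    let mut placed = Vec::new();
    for c in text.chars() {
        // Skip characters the font has no metrics for.
        let Some(m) = metrics.get(&c) else { continue };
        // Kerning adjusts the pen between specific pairs, e.g. "AV".
        if let Some(p) = prev {
            pen_x += kerning.get(&(p, c)).copied().unwrap_or(0.0);
        }
        placed.push((c, pen_x + m.left_bearing, baseline - m.top));
        pen_x += m.advance;
        prev = Some(c);
    }
    placed
}
```

With an "A" whose advance is 10 and whose left bearing is 1, an (A, V) kerning of -2 pulls the "V" two pixels closer than its plain advance would place it.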

Edit: got ascent, descent and horizontal advance working, so the font is now proportional and aligned with the pixel position I specify in the draw call. The only problem is that, even when skipping pixels with zero coverage, I get black pixels rather than the background color within the glyph bounds. So I probably have to interpolate between the two color values based on the coverage value, which means converting the color values to f32, doing the interpolation and then converting back. At that rate I might as well be doing gamma correction. Maybe I can create one draw routine that interpolates against the existing buffer contents and one that interpolates against a fixed background color.
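Here is a minimal sketch of coverage-based interpolation, assuming RGBA8 pixels and an 8-bit coverage value per pixel. `blend_coverage` and `blend_into_buffer` are hypothetical helpers that match the two routines described above: one blends against a fixed background color, the other reads the background from the buffer. The blend works directly on the sRGB-encoded bytes, so it does no gamma correction.

```rust
/// Linearly interpolates from `bg` to `fg` by `coverage`
/// (0 = background only, 255 = foreground only), per channel, in f32.
/// No gamma correction: the bytes are treated as if they were linear.
fn blend_coverage(fg: [u8; 4], bg: [u8; 4], coverage: u8) -> [u8; 4] {
    let t = coverage as f32 / 255.0;
    let mut out = [0u8; 4];
    for i in 0..4 {
        let v = bg[i] as f32 + (fg[i] as f32 - bg[i] as f32) * t;
        out[i] = v.round().clamp(0.0, 255.0) as u8;
    }
    out
}

/// Variant that uses the pixel already in an RGBA8 buffer as the background.
/// `idx` is the pixel index, not the byte offset.
fn blend_into_buffer(buf: &mut [u8], idx: usize, fg: [u8; 4], coverage: u8) {
    let px = &mut buf[idx * 4..idx * 4 + 4];
    let bg = [px[0], px[1], px[2], px[3]];
    px.copy_from_slice(&blend_coverage(fg, bg, coverage));
}
```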

Oh the endless boring possibilities, LOL.

Another successful day. I started before 9am for a change, if I remember correctly, and finished around 4.30pm. I may still do more work on my tutorial. The events of last night were unexpectedly fortuitous, and I am determined to keep tweaking and tweaking this tutorial in the new format until it is perfect. I definitely feel the GLMC competitiveness or perfectionism, whatever you want to call it: the desire to excel in all one does.

Last night I got the pixel interpolation working faster than you could say “linear algebra”, and the results looked very nice. Earlier today my mentor responded very positively to my last update, which cements my confidence that I can keep moving forward and get to a successful business, even if it takes me a month or two longer than I expected to get a successful app out. That is, as long as the war doesn’t destroy people’s desire and capacity to use VR technology (and I know there are people who will keep pushing that until the nukes are flying…)

Today the tutorial slowed me down a little, because I decided to write a full, in-depth explanation of the navigation system built into the example programs. I got to the very end of the explanation and for the life of me could not figure out why multiplying by the inverse of the grip matrix rotated in the correct direction rather than the opposite one!
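One way to see why the inverse appears, assuming a grab-and-drag style of navigation where the grabbed point should stay pinned in the world:

- Let S be the stage (player rig) pose and H the hand pose relative to the stage. The hand's world pose is then S·H.
- Keeping that world pose fixed while H changes from H0 to H1 requires S1·H1 = S0·H0, so S1 = S0·H0·H1⁻¹.
- Turning the hand by +δ therefore turns the stage by -δ, which makes the world appear to turn along with the hand.

The sketch below uses a simplified 2D pose (yaw plus floor-plane translation). `Pose2` and `drag_stage` are hypothetical and are not taken from the example programs.

```rust
/// Simplified 2D rigid pose: yaw `angle` (radians) plus translation (x, y) on the floor plane.
#[derive(Clone, Copy, Debug)]
struct Pose2 {
    angle: f32,
    x: f32,
    y: f32,
}

impl Pose2 {
    fn new(angle: f32, x: f32, y: f32) -> Self {
        Pose2 { angle, x, y }
    }

    /// Matrix-style product `self * other`: applies `other` first, then `self`.
    fn compose(self, other: Pose2) -> Pose2 {
        let (s, c) = self.angle.sin_cos();
        Pose2 {
            angle: self.angle + other.angle,
            x: self.x + c * other.x - s * other.y,
            y: self.y + s * other.x + c * other.y,
        }
    }

    /// Inverse pose: rotates back by -angle and undoes the translation.
    fn inverse(self) -> Pose2 {
        let (s, c) = self.angle.sin_cos();
        Pose2 {
            angle: -self.angle,
            x: -(c * self.x + s * self.y),
            y: s * self.x - c * self.y,
        }
    }
}

/// New stage pose that keeps the grabbed point fixed in the world:
/// S1 = S0 * H0 * H1^-1.
fn drag_stage(stage_at_grab: Pose2, hand_at_grab: Pose2, hand_now: Pose2) -> Pose2 {
    stage_at_grab.compose(hand_at_grab).compose(hand_now.inverse())
}
```

Composing the new stage pose with the new hand pose gives back the original world-space hand pose. That is the "rotates the correct direction" behavior: the stage counter-rotates so the world follows the hand.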

I took a walk to go see a friend to stop my mind from focusing on the problem incessantly without resolving it.

When I got back, I decided to test the performance of dynamic texture updating on the Quest 2. I ended up with 6+ strings drawn into the buffer, plus a clear-screen routine that draws a gradient into the buffer and should have been horribly slow. Yet the text was updating so fast that, when I looked closely, the numbers were blurring into one another as they changed because my eyes couldn’t keep up. The headset didn’t get more than slightly warm, even with me polling the controller every frame.
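Here is a rough sketch of a gradient "clear" routine of this kind, assuming a tightly packed RGBA8 buffer. `clear_gradient` is a hypothetical name, and the example program's actual routine may differ.

```rust
/// Fills a row-major RGBA8 buffer (`width * height * 4` bytes) with a vertical
/// gradient from `top` (first row) to `bottom` (last row).
fn clear_gradient(buf: &mut [u8], width: usize, height: usize, top: [u8; 4], bottom: [u8; 4]) {
    assert_eq!(buf.len(), width * height * 4);
    for row in 0..height {
        // t runs from 0.0 on the first row to 1.0 on the last row.
        let t = if height > 1 { row as f32 / (height - 1) as f32 } else { 0.0 };
        let mut color = [0u8; 4];
        for c in 0..4 {
            color[c] = (top[c] as f32 + (bottom[c] as f32 - top[c] as f32) * t).round() as u8;
        }
        // Compute the row color once, then copy it into every pixel of the row.
        for px in buf[row * width * 4..(row + 1) * width * 4].chunks_exact_mut(4) {
            px.copy_from_slice(&color);
        }
    }
}
```

Computing the color once per row and copying it keeps the per-pixel work to a 4-byte copy. That is one reason a routine like this can be cheaper than it first looks.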

Very impressed with how fast this example program is. I don’t know whether to ascribe that to Rust, Vulkan, the Quest 2’s hardware, or all three.

Reached a stopping point with the tutorial content and now I can take a rest and reflect on what I need to do for the app I had originally planned before my next coding session.

I feel I’m ready to work on app content now that I have enough of an understanding of colliders, physics, models, transformations and so on into infinity. Now it’s really just going to be down to my creativity, and I can finally start to better grasp what IG UPX can do for me.

Spent the morning examining the code for the custom rendering example in HH. Now that I’ve had a chance to look at it with eyes that aren’t tired out from pattern matching on a dozen other things, I understand how it works a lot better.

I can see how the custom render system sets up its own pipeline and separates the quadrics from the ordinary meshes. It binds a different pipeline whenever the shader associated with the next instanced primitive changes, and it handles all the low-level Vulkan command recording to ensure each primitive is drawn with the correct pipeline. So it’s more complicated than I originally thought, but still something that should be possible to implement in an ordinary program without much complexity. I think this is the final thing I need to get working before I continue with the app, mainly because I probably need semi-transparent meshes rather than relying on a shader that discards pixels based on their alpha value.
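The bind-only-on-change pattern can be modelled without any Vulkan calls. This is a simplified sketch. `PipelineId` and `Cmd` are hypothetical stand-ins for real pipeline handles and recorded commands, and sorting by pipeline is one assumed way of grouping the quadrics and ordinary meshes.

```rust
/// Hypothetical pipeline identifiers (e.g. the PBR mesh shader vs. a quadric shader).
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
enum PipelineId {
    Pbr,
    Quadric,
}

/// Stand-in for recorded commands: bind a pipeline, or draw a mesh by id.
#[derive(Debug, PartialEq)]
enum Cmd {
    BindPipeline(PipelineId),
    Draw(u32),
}

/// Groups draws by pipeline, then emits a bind only when the pipeline changes.
fn record(mut items: Vec<(PipelineId, u32)>) -> Vec<Cmd> {
    // sort_by_key is stable, so draw order is preserved within each pipeline.
    items.sort_by_key(|&(pipeline, _)| pipeline);
    let mut cmds = Vec::new();
    let mut current: Option<PipelineId> = None;
    for (pipeline, mesh) in items {
        if current != Some(pipeline) {
            cmds.push(Cmd::BindPipeline(pipeline));
            current = Some(pipeline);
        }
        cmds.push(Cmd::Draw(mesh));
    }
    cmds
}
```

Four interleaved draws become two binds instead of four. Pipeline binds are comparatively expensive, which is why grouping by shader pays off.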

I made several updates to the tutorial today to cover all 14 systems that can run during the game engine’s tick loop, colliders, and various aspects of my example code. Pretty happy with how far I’ve gotten in no more than 5 hours, if that. I’m going to keep adding to it gradually over the next couple of days before I finally send a PR to the responsible individuals.

Despite how much this is slowing down my app development, I still feel it’s a necessary step. It matters not just for marketing but for getting my own thoughts clear and in order, so that I can move forward with my app as easily as possible.

Getting some good manifestations of resources for my learning. Found this tutorial, which goes into the level of detail I felt I needed to understand how pipelines, descriptor sets, etc. all fit together.

My current plan is to summarize the existing graphics pipelines created by the render context and the GUI context. Then I’ll explain how these can be mixed with separate buffers for the color blending stage I’m going to add in my custom render example. I also need an internal summary of where the code sets up the physical and logical devices and command buffers, so I know what we have to work with.

Not a lot to report over the last couple of days. Kept consuming tutorials on Vulkan to the point where I’m basically sick of them. I feel like I’m at a point now where I can almost articulate what you need to do to implement a custom render pipeline to work in tandem with the existing pipelines; tonight and tomorrow I need to implement that and test it on my headset.

I had wine last night because the intensity of my thought trying to force my understanding to coalesce was almost unbearable. Sometimes for me it feels like I have to blunt the sharpness of my own thoughts to be able to handle them. For the last couple of days I’ve felt like I’m getting ready to birth a potato through an orifice the size of a snow-pea. It’s a really weird sensation. I guess the closest I could come to explaining it rationally would be that an undertaking like this requires a hell of a lot of mental bandwidth to track all the different moving pieces. Holding all the pieces without it all collapsing is a juggling act I find difficult in my old age, and that probably has a lot to do with all the other things I’m tracking in the background that I didn’t need to several years ago.

My findings today are starting to make things make sense, but it’s a slow process of trial and error, and perhaps my attempts to avoid errors are keeping me from getting started with the trial part. What I discovered today is that when you bind a new pipeline, you only need to rebind descriptor sets if the two pipelines’ layouts don’t use the same descriptor set layouts. I also learned there is a difference between specifying the attachments a pipeline renders to and specifying the descriptor sets themselves.
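The Vulkan specification's rule behind this is pipeline layout compatibility. Two layouts are "compatible for set N" when sets 0 through N use identically defined descriptor set layouts and the push constant ranges are identical. Below is a simplified model of that rule. `PipelineLayoutDesc` and `reusable_sets` are hypothetical, and equal ids stand in for "identically defined" layouts.

```rust
/// Hypothetical stand-in for a descriptor set layout: equal ids mean
/// "identically defined" layouts.
type SetLayoutId = u32;

/// Simplified pipeline layout description.
struct PipelineLayoutDesc {
    set_layouts: Vec<SetLayoutId>,
    push_constant_ranges: Vec<(u32, u32)>, // (offset, size) in bytes; stage flags omitted
}

/// How many leading descriptor sets (set 0, 1, ...) bound under `old` a pipeline
/// using `new` can keep using without a rebind.
fn reusable_sets(old: &PipelineLayoutDesc, new: &PipelineLayoutDesc) -> usize {
    // Different push constant ranges make the layouts incompatible for every set.
    if old.push_constant_ranges != new.push_constant_ranges {
        return 0;
    }
    // Compatibility stops at the first set whose layout differs.
    old.set_layouts
        .iter()
        .zip(&new.set_layouts)
        .take_while(|(a, b)| a == b)
        .count()
}
```

For example, a custom pipeline that shares sets 0 and 1 with the PBR pipeline but replaces set 2 only needs to rebind set 2 onward.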

Meh. Anyway, IG UPX is doing its job, I just feel like my brain/body is struggling to keep up with the demands. I suppose I did back off on my supplements recently; time to stop that and chow down on the choline/ALCAR again so I don’t feel like I’m being poked by needles with every sharp thought.

Made some good progress today. It seems like it’s always just a little progress, not as much as the perfectionist in me would have liked, but I find myself a little more satisfied each time.

What I understood today helped to tie a lot of stuff together. Basically:

- The creation of a Vulkan graphics pipeline requires specifying a render pass

- The render pass contains all the information about the attachments it uses, including input attachments, and what will happen to each of them after the render pass. A framebuffer then supplies the actual image views for those attachments

- The GUI code I was reviewing creates an empty texture for each GUI Panel and renders into this texture as a framebuffer, setting a blend mode on the pipeline so that the framebuffer is properly blended into the final swapchain image

- The other custom rendering example I was looking at basically directly copied its parent’s render pass with its attachments, which is why it was able to push commands into the same render pass’s command buffer and get away with it.

- Basically the missing step I wasn’t getting was the need to allocate a framebuffer for a render pass to draw into, and how that relates to the final output.
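The relationship in the last point can be modelled with a few of Vulkan's framebuffer validity rules:

- The framebuffer must supply exactly as many image views as the render pass declares attachments.
- Each view must match the declared format and sample count.
- Each view must be at least as large as the framebuffer.

This is a simplified, hypothetical check, not an exhaustive list of the rules. `Format`, `AttachmentDesc`, `ImageViewDesc` and `check_framebuffer` are illustrative names.

```rust
/// Illustrative subset of image formats (stand-in for VkFormat).
#[allow(dead_code)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum Format {
    Rgba8Srgb,
    Rgba16Float,
    D32Float,
}

/// What the render pass declares for one attachment.
struct AttachmentDesc {
    format: Format,
    samples: u32,
}

/// What the framebuffer actually provides for one attachment.
struct ImageViewDesc {
    format: Format,
    samples: u32,
    width: u32,
    height: u32,
}

/// Checks a few of the conditions for using these views as a framebuffer of
/// size `width` x `height` with a render pass declaring `pass_attachments`.
fn check_framebuffer(
    pass_attachments: &[AttachmentDesc],
    views: &[ImageViewDesc],
    width: u32,
    height: u32,
) -> Result<(), String> {
    if pass_attachments.len() != views.len() {
        return Err(format!(
            "render pass expects {} attachments, framebuffer has {}",
            pass_attachments.len(),
            views.len()
        ));
    }
    for (i, (a, v)) in pass_attachments.iter().zip(views).enumerate() {
        if a.format != v.format || a.samples != v.samples {
            return Err(format!("attachment {i}: format or sample count mismatch"));
        }
        if v.width < width || v.height < height {
            return Err(format!("attachment {i}: image view smaller than framebuffer"));
        }
    }
    Ok(())
}
```

Adding a depth view to the framebuffer without declaring a depth attachment in the render pass (or the reverse) fails the first check. Mismatches like this are the kind of thing validation layers report.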

So now that I understand all this, I should be able to finish my own custom rendering example and test it on the headset this weekend. And then complete the tutorial I’ve been writing and push its contents out to the relevant parties to examine and pass judgement.

It’s taken me an entire week of straining to understand this horribly complex set of convoluted structures needed to create a graphics pipeline. I hope it’s the last time I need to deal with structures that complex!

It hit me an hour ago why the developer wrote the code comment “TODO: This is terrible” next to an incredibly long pipeline setup command in the GUI code. I finally saw how the dependencies, set in two different places in the code, ensure the GUI rendering is deferred until after the input color attachment is complete, and how the dynamic state and scissoring played into why the whole thing worked. Then it dawned on me that any code attempting custom rendering would have to integrate with this and create its own memory fence, and I could see how the data flowed through the different render passes.

And it was like “oh my lmao magic! and i totally get the joke too!”. And it tells me exactly how to approach this tutorial now. LOL

To clarify, it was a total IG UPX moment. My mind kept pulling on the thread even after I had stepped out for a walk, and then delivered the bombshell gradually. It wasn’t that the code was bad. It’s that setting things up so multiple UIPanels can each have a renderpass and dynamically update the screen the way they do, using Vulkan’s dependency definition syntax, is more than a little bit crazy making, to misquote Morpheus. More importantly, I could see how any series of renderpasses executed within a program using this library will need to be harmonized, using appropriate fences, rewritten primary shaders, or some other solution, who knows what. None of that is good or bad, but it is clarity, and it tells me exactly how to approach the rest of the tutorial with the help of UWX.

Alright, so I finally submitted a pull request tonight for my tutorial as it stands. I think I’m doing reasonably well at explaining everything so far. Crunch time will come tomorrow, when I try to get my code working with the existing dynamic mesh objects I created in my earlier example. If I can get semi-transparent meshes blending in a second renderpass, I should then have enough to create wrist menus. Then I can finally get back to writing an app rather than figuring out how a game library works LOL.

I briefly considered other purchases this weekend for PCC or LoTS. Try as I might, I couldn’t justify it. I’m pretty impressed by the possibilities of PCC so far, but not convinced enough that I’m going to sacrifice almost $45 of our local currency to ruin my existing stack when I could just simply have faith that my own G-d will help me deal with manipulators in an efficient manner. I might change my mind as I get closer to having a finished product, who knows.

Began the process of implementing the custom rendering today.

There’s still at least a couple of days to go in what I have to do.

I needed to allocate my own buffers for the framebuffer used in the render pass. I’ve also wired up all the position, vertex, material, index and skin buffers assigned to the render context object. All the information for pipeline creation is set up except for the renderpass itself.

There’s the topology of the input, the viewport state, the vertex input state, the rasterisation state, the multisample state, the depth stencil state, and the color blend state. Shaders compile without errors. Now I just have to specify the input to the renderpass in terms of its attachments, subpasses and dependencies.
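For the color blend state, a common choice for semi-transparent geometry is:

- `srcColorBlendFactor = VK_BLEND_FACTOR_SRC_ALPHA`
- `dstColorBlendFactor = VK_BLEND_FACTOR_ONE_MINUS_SRC_ALPHA`
- `colorBlendOp = VK_BLEND_OP_ADD`
- `VK_BLEND_FACTOR_ONE` and `VK_BLEND_FACTOR_ONE_MINUS_SRC_ALPHA` as the source and destination factors for alpha

Below is a CPU model of what that configuration computes per pixel, assuming straight (non-premultiplied) alpha. `blend_straight_alpha` is a hypothetical helper, not part of the pipeline code.

```rust
/// Models the fixed-function blend with the factors listed above:
///   rgb = src.rgb * src.a + dst.rgb * (1 - src.a)
///   a   = src.a   * 1     + dst.a   * (1 - src.a)
/// Colors are in 0.0..=1.0. A UNORM attachment would clamp the stored result.
fn blend_straight_alpha(src: [f32; 4], dst: [f32; 4]) -> [f32; 4] {
    let a = src[3];
    [
        src[0] * a + dst[0] * (1.0 - a),
        src[1] * a + dst[1] * (1.0 - a),
        src[2] * a + dst[2] * (1.0 - a),
        src[3] + dst[3] * (1.0 - a),
    ]
}
```

A fragment with alpha 0 leaves the destination untouched. This is what lets a semi-transparent mesh show what is behind it, instead of discarding those fragments in the shader.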

Except I suspect it’s not quite that simple. I don’t understand how the separate passes work together to create a single set of images. I keep reading over the original code and just getting more confused. I suspect I have a tutorial or two more to watch before the confusion is removed.

Figured out last night that I can just use the pipeline layout from the PBR render context and piggy back off the same render pass but using a different pipeline. So today I got 50% of the custom render code out of the way and compiling (but not tested yet).

I have a good mind to just continue through tonight to get the remaining 50% written into the implementation and then test it. There are a few minor points I still need to sort out like why the existing shaders are using 16 bit output for the colors and can this be changed, but other than that by tomorrow I should be fully in the process of testing the custom render code and finishing the tutorial.

Definitely feeling some recon at the moment. Not sure if it’s just stack overload or what.

The idea from the other night of using the same pipeline layout as the other render pass failed. I figured out I’m going to have to create my own framebuffer to house images and image views for the render pass/pipeline I’m writing separate from the existing pass, because of the blending requirements and the weirdness of how that pass is set up. So that means more work, but at the moment I have been having some major anxiety around getting that code done in a timely fashion or even testing it properly. The upcoming mentoring session probably adds to the stress of the whole situation as I’m further behind on my schedule than I would like to be.

I know I’m probably overreacting, and the stress probably comes from multiple factors like recent solar flare activity, increasing energy levels without enough harmonization, and a subtle reaction to the stuff going on behind the curtain at the moment on the global stage. But that doesn’t make it any easier to manage. Chemically, at least I have crutches I can rely on, including various amino acids and pills like tribulus, resveratrol, msm, vitamin d and pretty much anything known to man that would help. Also I keep getting drawn to that body meditation, but not just a “without judgement” focus on body parts: a strong, rock-solid focus over a sustained period of time. I also now have this anointing oil, which is a nice psychological calming mechanism.

I think I’m just going to have to keep up the self care regimen this weekend and hope things improve. Nothing to be gained by overanalyzing it. But I thought it was worth noting down to try and pinpoint stuff later.

OMG… the possibilities.

This kind of blew my mind. Basically code that is executed at compile time to generate a constant or various types of behavior.
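In Rust, the closest built-in version of this idea is `const fn`, which the compiler can evaluate to produce a constant. The video isn't named here, so this only illustrates the concept. Below is a small sketch that builds a lookup table at compile time. The table's contents (a rough gamma-2.0 decode, v²/255) are an arbitrary example.

```rust
/// Builds a 256-entry table at compile time. `while` is used because
/// `for` loops (which rely on iterator traits) are not allowed in `const fn`.
const fn build_square_table() -> [u8; 256] {
    let mut table = [0u8; 256];
    let mut i = 0;
    while i < 256 {
        // Rounded v * v / 255, i.e. an approximate gamma-2.0 decode.
        table[i] = ((i * i + 127) / 255) as u8;
        i += 1;
    }
    table
}

/// Evaluated by the compiler; the finished table is embedded in the binary.
const SQUARE_TABLE: [u8; 256] = build_square_table();
```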

Kind of a moment of “the beauty of code” watching that, I feel like I’ll probably need another watch to fully appreciate the possibilities. Kind of makes the weekend time off of writing the necessary code feel worthwhile. Have been absorbing ideas of creative beauty which appear to give potential routes to take with the software once this proof of concept with Vulkan is completed.

Today I managed to get a successful render pass working. Technically it was working much earlier in the day, but it took me a while to adjust the code to render directly into a texture so I could see the results.

I set up a framebuffer with a single color attachment. I eventually switched this color attachment from a vulkan_context.create_image call to an empty texture passed in, created outside the custom render context’s instantiation function. The original framebuffer creation had included a depth buffer, but that crashed with a segfault, and it wasn’t until tonight that I figured out how to enable validation layers to track down the cause.

I’m binding the vertex and index buffers from the original render context, and other than that the descriptor sets from the original PBR renderpass are bound so that the shaders can read the same data. I drew a single object into the render pass/pipeline and it appeared as expected in the half sized mesh plane I set up which uses the framebuffer object as a texture.

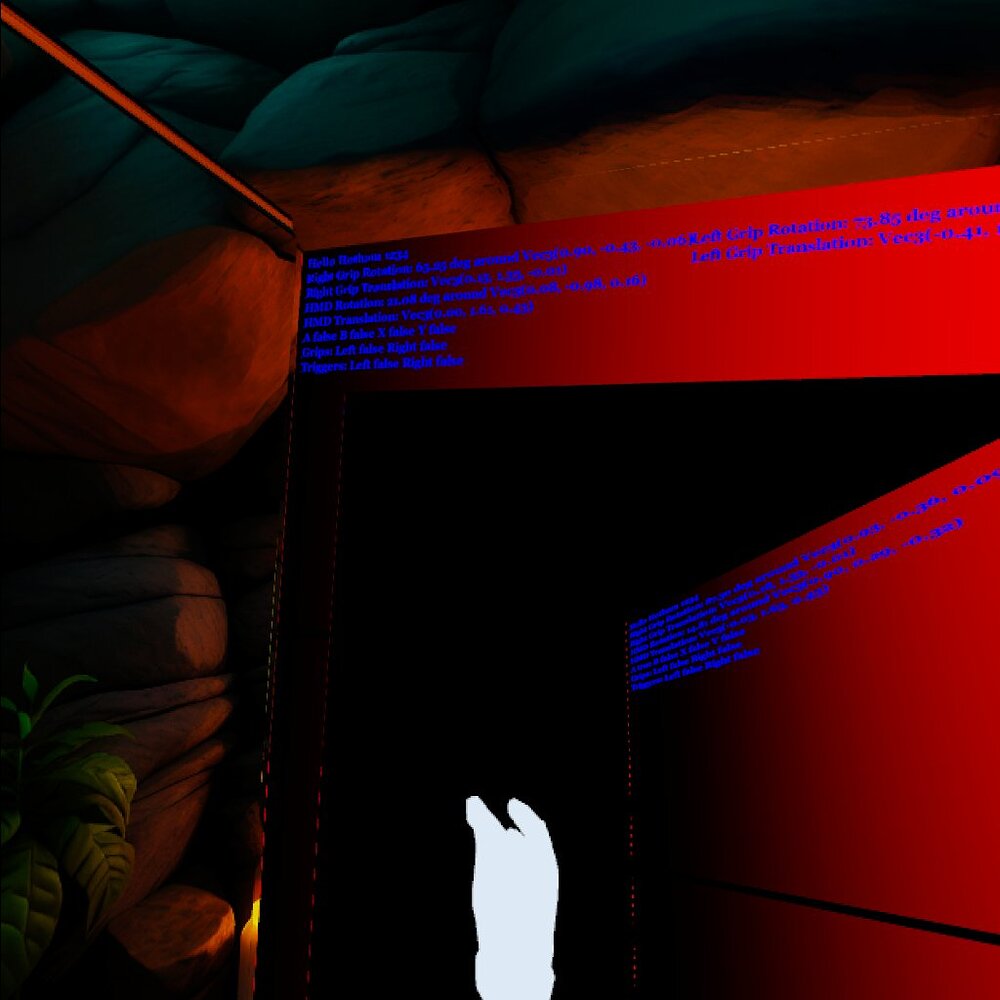

All this probably sounds like trash, so here’s an image:

This shows the mesh plane which I drew on originally showing up on the back wall, with the same mesh plane rendered according to where my eyes were looking, on another mesh plane. Basically, the smaller version shows up when I have that mesh assigned as “semi transparent”, the larger version shows when I transition it to being rendered by a different render pass.

TL;DR: this was a big win. I also found out from other people that Vulkan validation layers can be enabled without any special action: https://developer.oculus.com/documentation/unity/ts-vulkanvalidation/

Look at the keywords in that post and try them in Google. I guarantee you won’t be able to find it via an ordinary Google search.

Well, it took longer than expected to get this working, but I now have a renderpass rendering the entire set of meshes into a window on the screen, including my mesh plane with the transparent pixels, and transparency is being respected. Now all I need to figure out is how to set up a render pass that handles multiview and MSAA and renders into that original framebuffer.

So now I can finish my tutorial. The problem with the depth buffer crashing the program earlier turned out to be a pointer that didn’t live long enough to be used by the call to vkCreateFramebuffer.
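One common way a pointer fails to live long enough in Rust Vulkan code is calling `.as_ptr()` on a temporary array or `Vec` while filling in a create-info struct. The temporary is dropped at the end of that statement, before the create call reads it. This may not be exactly what happened here. Below is a std-only sketch of the safe pattern. `FramebufferCreateInfoLike` and `create_framebuffer_like` are hypothetical stand-ins for `VkFramebufferCreateInfo` and `vkCreateFramebuffer`, and image view handles are modelled as plain `u64`s.

```rust
/// Hypothetical stand-in for a C create-info struct: it stores a raw pointer
/// plus a count instead of a borrowed slice, so the compiler cannot check
/// that the pointed-to data is still alive.
#[repr(C)]
struct FramebufferCreateInfoLike {
    attachment_count: u32,
    p_attachments: *const u64, // image view handles, modelled as u64
}

/// Hypothetical stand-in for the driver call: reads every handle through the pointer.
/// Safety: `info.p_attachments` must point to `attachment_count` live values.
unsafe fn create_framebuffer_like(info: &FramebufferCreateInfoLike) -> u64 {
    let views = unsafe {
        std::slice::from_raw_parts(info.p_attachments, info.attachment_count as usize)
    };
    views.iter().sum() // pretend "handle" derived from the inputs
}

fn make_framebuffer(color_view: u64, depth_view: u64) -> u64 {
    // Bind the array to a named variable so it outlives the call below.
    // Writing `p_attachments: vec![color_view, depth_view].as_ptr()` instead would
    // drop the temporary Vec at the end of that `let` statement, leaving a
    // dangling pointer for the create call to read.
    let attachments = [color_view, depth_view];
    let info = FramebufferCreateInfoLike {
        attachment_count: attachments.len() as u32,
        p_attachments: attachments.as_ptr(),
    };
    // SAFETY: `attachments` is still alive here and has `attachment_count` elements.
    unsafe { create_framebuffer_like(&info) }
}
```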

Once the tutorial is done, I’m going to put it to bed so I can focus on my app finally.

I feel like summarizing this journal to this point for people stepping in late to the party. I started this journal June 19th this year, 2023, 10 days after I unofficially began on the course of my current business plan.

This journal was always meant to be more of an occasional record of my experience creating my first couple of Rust-based VR apps. I had a few key posts throughout the 3 months I’ve had it active so far, and perhaps at some point I’ll put together an index. There were a few more personal posts than I would have liked, and a few more “this is all that’s going on right now” status updates than I should have made.

But basically so far:

- I’ve reached a point of technical proficiency with Rust and Hotham where I’m not tripping over myself trying to figure out how to do something graphically.

- I identified some key tech early on, like tokio, polars and so on, that I plan on making use of

- I’ve very gradually become reasonably proficient with adb since starting with development

- I’ve learned how TrueType/OpenType fonts work and how to display them on screen

- I’ve learned how to manipulate textures and upload them to the GPU

- I’ve learned how to create a mesh plane and automate the update of content to it via a renderpass or a direct manipulation of data in memory

- I’ve learned how to manipulate the existing shaders and passes to render a version of the world that respects transparency

- This last fact has opened up amazing vistas that I had been waiting almost a month to gain access to. Once I fix the framebuffer so my current picture-in-picture view renders to the screen instead, I will be able to create popup textual hints in precisely sized fonts, hovering above the surface of a mesh or prop. I will also be able to create semi-transparent (both translucent and fully see-through) menus that react to the user’s pointers/hands, and hover these on top of other props.

- Basically, the ability to respect transparency on overlays was the last technical hurdle; now the fun part should begin, most likely next week. Tomorrow is going to be wrap-up and recording of existing lessons.